Learning to Fly in Seconds

YouTube Video

GitHub: https://github.com/arplaboratory/learning-to-fly

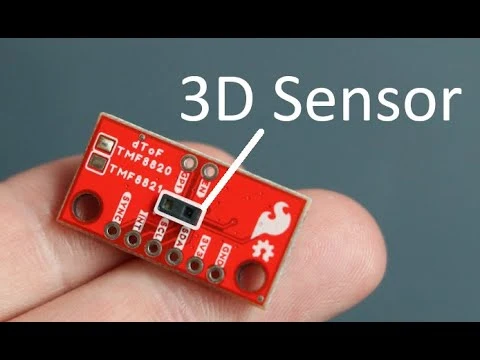

Unlocking the Performance of Proximity Sensors by Utilizing Transient Histograms

YouTube Video

These Drones Are Tracked and Controlled Using $1 Webcams and ESP32s

Joshua Bird's open source project allows users to control autonomous drones through a web interface and a series of ESP32 boards.

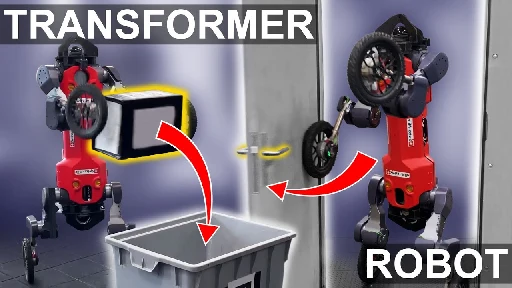

Curiosity-Driven Learning of Joint Locomotion and Manipulation Tasks

YouTube Video

TOPS (Traverser of Planar Surfaces) 12-DOF quadrupedal robot

TOPS (Traverser of Planar Surfaces), or SPOT spelled backward, is a 12-DOF quadrupedal robot. I have attempted robot dogs in the past, but none came close to the natural gait of a real dog. The goal of this project is to build a more dynamic quadrupedal robot. Website (Project Overview and Int...

Awesome Control Theory

Awesome resources for learning control theory (GitHub: A-make/awesome-control-theory).

How Disney Packed Big Emotion Into a Little Robot - IEEE Spectrum

Melding animation and reinforcement learning for free-ranging emotive performances

Extreme Parkour with Legged Robots

> ... In this paper, we take a similar approach to developing robot parkour on a small low-cost robot with imprecise actuation and a single front-facing depth camera for perception which is low-frequency, jittery, and prone to artifacts. We show how a single neural net policy operating directly from a camera image, trained in simulation with large-scale RL, can overcome imprecise sensing and actuation to output highly precise control behavior end-to-end. We show our robot can perform a high jump on obstacles 2x its height, long jump across gaps 2x its length, do a handstand and run across tilted ramps, and generalize to novel obstacle courses with different physical properties.

Course "Modern Robotics I: Arm-type Manipulators" by Madi Babaiasl

This repository contains all the lessons for Modern Robotics I (GitHub: madibabaiasl/modern-robotics-I-course).

> ... the field of robotics is still under development (it is an active research area), the basic principles of robot design (modeling, perception, planning, and control) are well understood. In Modern Robotics I, we will use both theory and practice to learn these basics specifically for arm-type manipulators. You will have the opportunity to work with a real robotic arm that is controlled by the Robot Operating System (ROS) to learn about these topics through hands-on experience.

Robotics, Vision and Control: 3rd edition in Python (2023)

Code examples for Robotics, Vision & Control, 3rd edition, in Python (GitHub: petercorke/RVC3-python).

> Welcome to the online hub for the book: Robotics, Vision & Control: fundamental algorithms in Python (3rd edition) by Peter Corke, published by Springer-Nature 2023.

Jupyter Notebooks link

Pour me a drink: Robotic Precision Pouring Carbonated Beverages into Transparent Containers

This work proposes an autonomous robot system for precise pouring of various liquids into transparent containers. The approach leverages RGB input and pre-trained vision models for zero-shot capability, eliminating the need for additional data or manual annotations. It also integrates ChatGPT for user-friendly interaction, so users can make pouring requests in natural language. The experiments demonstrate that the system can pour various beverages into containers based on visual input alone.

Building a Raspberry Pi Pico and RPI4 ROS2 Robot

A dev robot for exploring ROS2 and robotics using the Raspberry Pi Pico and Raspberry Pi 4.

Learned Inertial Odometry for Autonomous Drone Racing

YouTube Video

Inertial odometry is a cost-effective solution for quadrotor state estimation, but it suffers from drift. This study introduces a learning-based odometry algorithm for drone racing, combining inertial measurements with a model-based filter. Results show it outperforms other methods and has potential for agile quadrotor flight research.

Source: Davide Scaramuzza
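A minimal sketch of why pure inertial odometry drifts: a constant accelerometer bias b, double-integrated, produces a position error that grows roughly as ½·b·T². The 100 Hz rate, 0.05 m/s² bias and noise level below are illustrative assumptions, and this is plain dead reckoning, not the learned odometry described in the paper.

```python
import numpy as np

def dead_reckon(accel: np.ndarray, dt: float) -> np.ndarray:
    """Double-integrate 1-D acceleration samples (simple Euler sums) into position."""
    vel = np.cumsum(accel) * dt
    return np.cumsum(vel) * dt

T = 10.0       # seconds of flight (assumed)
dt = 0.01      # 100 Hz IMU (assumed)
n = round(T / dt)
bias = 0.05    # constant accelerometer bias in m/s^2 (assumed)

rng = np.random.default_rng(0)
true_accel = np.zeros(n)                                  # the vehicle is actually hovering in place
measured = true_accel + bias + rng.normal(0.0, 0.02, n)   # biased, noisy IMU readings

pos = dead_reckon(measured, dt)
print(f"estimated displacement after {T:.0f} s: {pos[-1]:.2f} m (true: 0.00 m)")
print(f"bias-only prediction 0.5*b*T^2: {0.5 * bias * T**2:.2f} m")
```

Because the error compounds with flight time, inertial measurements alone are not enough for long agile flights, which is why the study combines them with a model-based filter.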

evoBOT - inventors and developers explain the technology

YouTube Video

> The video shows the latest generation of robots, the evoBOT. The researchers and developers talk about the versatile applications of this robot. They explain how it is able to mimic human movements and adapt to different environments. They also explain the technology behind it. The evoBOT is a milestone in robotics and offers numerous advantages. For example, it can be used in industry to perform repetitive tasks. It could also play an important role in healthcare.

What is the Impact of Releasing Code with Publications? Statistics from the Machine Learning, Robotics, and Control Communities

> We found that, over a six-year period (2016-2021), the percentages of papers with code at major machine learning, robotics, and control conferences have at least doubled. Moreover, high-impact papers were generally supported by open-source codes. As an example, the top 1% of most cited papers at the Conference on Neural Information Processing Systems (NeurIPS) consistently included open-source codes. In addition, our analysis shows that popular code repositories generally come with high paper citations, which further highlights the coupling between code sharing and the impact of scientific research.

Source: Learning Systems and Robotics Lab

Why can Reinforcement Learning achieve results beyond Optimal Control in many real-world robotics control tasks?

A central question in robotics is how to design a control system for an agile, mobile robot. This paper studies this question systematically, focusing on a c...

> RL's most impressive achievements are beyond the reach of existing optimal control-based systems. However, less attention has been paid to the systematic study of fundamental factors that have led to the success of reinforcement learning or have limited optimal control. This question can be investigated along the optimization method and the optimization objective. Our results indicate that RL outperforms OC because it optimizes a better objective: OC decomposes the problem into planning and control with an explicit intermediate representation, such as a trajectory, that serves as an interface. This decomposition limits the range of behaviors that can be expressed by the controller, leading to inferior control performance when facing unmodeled effects. In contrast, RL can directly optimize a task-level objective and can leverage domain randomization to cope with model uncertainty, allowing the discovery of more robust control responses. This work is a significant milestone in agile robotics and sheds light on the pivotal roles of RL and OC in robot control.

Source: Davide Scaramuzza
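A minimal sketch of domain randomization, the technique the abstract credits with helping RL cope with model uncertainty: instead of tuning a controller on one nominal model, optimize its expected cost over many models whose physical parameters are sampled at random. For brevity, a brute-force search over PD gains stands in for RL training; the 1-D point-mass plant, the mass/drag/latency ranges and the cost weights are all hypothetical and not taken from the paper.

```python
import numpy as np
from dataclasses import dataclass

@dataclass
class Dynamics:
    mass: float   # kg
    drag: float   # linear drag coefficient, N*s/m
    delay: int    # actuation latency, in control steps

def sample_dynamics(rng: np.random.Generator) -> Dynamics:
    # Hypothetical randomization ranges around a 1 kg, drag-free, zero-latency nominal model.
    return Dynamics(mass=float(rng.uniform(0.8, 1.2)),
                    drag=float(rng.uniform(0.0, 0.5)),
                    delay=int(rng.integers(0, 3)))

def rollout_cost(kp: float, kd: float, dyn: Dynamics, dt: float = 0.02, steps: int = 300) -> float:
    """Regulate a 1-D point mass from x=1 to x=0 with a PD law; return the quadratic cost."""
    x, v = 1.0, 0.0
    queue = [0.0] * dyn.delay          # commands issued but not yet applied
    cost = 0.0
    for _ in range(steps):
        queue.append(-kp * x - kd * v)
        u = queue.pop(0)               # command that reaches the actuator this step
        v += (u - dyn.drag * v) / dyn.mass * dt
        x += v * dt                    # semi-implicit Euler step
        cost += (x * x + 0.01 * u * u) * dt
    return cost

def avg_cost(gains, domains) -> float:
    """Expected cost of a gain pair over a set of sampled dynamics models."""
    return float(np.mean([rollout_cost(*gains, d) for d in domains]))

rng = np.random.default_rng(0)
train_domains = [sample_dynamics(rng) for _ in range(64)]
test_domains = [sample_dynamics(rng) for _ in range(64)]
nominal = Dynamics(mass=1.0, drag=0.0, delay=0)
candidates = [(kp, kd) for kp in (4.0, 8.0, 16.0, 32.0) for kd in (1.0, 2.0, 4.0, 8.0)]

# The search stands in for RL training: pick the gains minimizing cost on the nominal
# model only, versus the expected cost over the randomized training models.
best_nominal = min(candidates, key=lambda g: rollout_cost(*g, nominal))
best_randomized = min(candidates, key=lambda g: avg_cost(g, train_domains))

for name, g in [("nominal", best_nominal), ("randomized", best_randomized)]:
    print(f"{name:>10}: kp={g[0]:.0f}, kd={g[1]:.0f}, held-out cost={avg_cost(g, test_domains):.3f}")
```

Because every sampled model is scored during selection, gains that only work on the idealized nominal plant (for example, ones that oscillate once actuation latency is added) are penalized; the held-out models check whether the choice carries over to unseen parameter samples.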