- gizmodo.com Microsoft Study Finds Relying on AI Kills Your Critical Thinking Skills

Researchers from Microsoft and Carnegie Mellon University warn that the more you use AI, the more your cognitive abilities deteriorate.

Crossposting from lemm.ee's technology community

Hahahahaha. At least they had the balls to publish and host it themselves.

- newsocialist.org.uk AI: The New Aesthetics of Fascism

It's embarrassing, destructive, and looks like shit: AI-generated art is the perfect aesthetic form for the far right.

- pivot-to-ai.com The UK’s ‘turbocharged’ AI initiative — bringing data center noise to a village near you

Bitcoin mining in the US is notorious for inflicting ridiculously noisy data centers on small towns across the country. We can bring these deafening benefits to towns and villages across the UK — a…

- pivot-to-ai.com Thomson Reuters wins AI training copyright case — what this does and doesn’t mean

Thomson Reuters has won its copyright case against defunct legal startup Ross Intelligence, who trained an AI model on headnotes from Westlaw. [Wired, archive; opinion, PDF, case docket] This was a…

- pivot-to-ai.com AI chatbots are still hopelessly terrible at summarizing news

BBC News has run as much content-free AI puffery as any other media outlet. But they had their noses rubbed hard in what trash LLMs were when Apple Intelligence ran mangled summaries of BBC stories…

- pivot-to-ai.com Elon Musk’s DOGE turns to AI to accelerate its destructive incompetence

You’ll be delighted to hear that Elon Musk’s Department of Government Efficiency and the racist rationalist idiot kids he’s hired to do the day-to-day of destruction are heavily into “AI,” because …

- pivot-to-ai.com Microsoft research: Use AI chatbots and turn yourself into a dumbass

You might think using a chatbot to think for you just makes you dumber — or that chatbots are especially favored by people who never liked thinking in the first place. It turns out the bot users re…

-

Andrew Molitor on "AI safety". "people are gonna hook these dumb things up to stuff they should not, and people will get killed. Hopefully the same people, but probably other people."

nonesense.substack.com AI Safety

The current state of the art of AI safety research is mainly of two sorts: “what if we build an angry God” and “can we make the thing say Heil Hitler?” Neither is very important, because in the first place we’re pretty unlikely to build a God, and in the second place, who cares?

- pivot-to-ai.com DeepSeek roundup: banned by governments, no guard rails, lied about its training costs

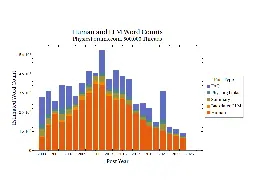

Of course DeepSeek lied about its training costs, as we strongly suspected. SemiAnalysis has been following DeepSeek for the past several months. High Flyer, DeepSeek’s owner, was buying Nvidia GPU…

- pivot-to-ai.com OpenAI does a Super Bowl ad, Google’s ad uses bad stats from Gemini

When you advertise at the Super Bowl, you’ve reached just about every consumer in America. It’s the last stop. If you’re not profitable yet, you never will be. Back in the dot-com bubble, Pets.com …

- pivot-to-ai.com How to remove Copilot AI from your Office 365 subscription: hit ‘cancel’

Here in the UK, Microsoft is now helpfully updating your Office/Microsoft/Copilot 365 subscription to charge you for the Copilot AI slop generator! Being Microsoft, they’re making the opt-out stupi…

- pivot-to-ai.com Do be evil: Google now OK with AI for weapons and surveillance — for ‘freedom, equality, and human rights’

Good news! Google has determined that “responsible AI” is in no way incompatible with selling its AI systems for weaponry or surveillance. Google don’t state this directly — they just quietly remov…

-

Iris Meredith: Licking the AI Boot

"the AI thread in our society now is nothing more or less than a demand by the wealthy creators of it for submission. It's a naked show of force by the powerful and stupid: you will use this tool, and you will like it."

- pivot-to-ai.com Forum admin’s madness, AI edition: the old Physics Forums site fills with generated slop

In 2025, the web is full of slop. Some propose going back to 2000s-style forums. But watch out for 2025 admins. Physics Forums dates back to 2001. Dave and Felipe of Hall Of Impossible Dreams notic…

-

What is the charge? Eating an LLM? A succulent Chinese LLM? DeepSeek judo-thrown out of Australian government devices

OFC if there were any real sense or justice in the world, LLMs would be banned outright.

- pivot-to-ai.com Oh no! AI can replicate itself! … when you tell it to, and you give it copies of all its files

“Frontier AI systems have surpassed the self-replicating red line” is the sort of title you give a paper for clickbait potential. An LLM, a spicy autocomplete, can now produce copies of itself! App…

-

Stubsack: weekly thread for sneers not worth an entire post, week ending 9th February 2025

Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

> The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)
>
> Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

- pivot-to-ai.com Skynet, but it’s Wheatley: OpenAI sells ‘reasoning’ AI to US government for nuclear weapon security

In hot pursuit of those juicy defense dollars, OpenAI has signed a new contract with the U.S. National Laboratories to “supercharge their scientific research” with OpenAI’s latest o1-series confabu…

- pivot-to-ai.com Cleveland police use AI facial recognition — and their murder case collapses

The only suspect in a Cleveland, Ohio, murder case is likely to walk because the police relied on Clearview AI’s facial recognition to get a warrant on them — despite Clearview warning specifically…

- www.technologyreview.com DeepSeek might not be such good news for energy after all

New figures show that if the model’s energy-intensive “chain of thought” reasoning gets added to everything, the promise of efficiency gets murky.

> In the week since a Chinese AI model called DeepSeek became a household name, a dizzying number of narratives have gained steam, with varying degrees of accuracy [...] perhaps most notably, that DeepSeek’s new, more efficient approach means AI might not need to guzzle the massive amounts of energy that it currently does.
>
> The latter notion is misleading, and new numbers shared with MIT Technology Review help show why. These early figures—based on the performance of one of DeepSeek’s smaller models on a small number of prompts—suggest it could be more energy intensive when generating responses than the equivalent-size model from Meta. The issue might be that the energy it saves in training is offset by its more intensive techniques for answering questions, and by the long answers they produce.
>
> Add the fact that other tech firms, inspired by DeepSeek’s approach, may now start building their own similar low-cost reasoning models, and the outlook for energy consumption is already looking a lot less rosy.
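A back-of-the-envelope way to see how training savings get eaten by inference. Assume (our simplification, not the article's figures) that $T$ is one-off training energy, $L$ is average answer length in tokens, $e$ is energy per generated token (taken as equal for both models, since they're the same size), and $n$ is the number of queries served; subscript $R$ is the reasoning model and $M$ the Meta baseline:

$$E_R(n) = T_R + n L_R e, \qquad E_M(n) = T_M + n L_M e$$

If $T_R < T_M$ but $L_R > L_M$, the reasoning model uses more energy overall as soon as

$$n > \frac{T_M - T_R}{(L_R - L_M)\,e}$$

so the more widely chain-of-thought answering gets bolted onto everything (larger $n$), the sooner the training savings are gone.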

-

Lol. Lmao even. "DeepSeek R1 reproduced for $30: Berkeley researchers replicate DeepSeek R1 for $30—casting doubt on H100 claims and controversy"

Sam "wrong side of FOSS history" Altman must be pissing himself.

Direct Nitter Link:

https://nitter.lucabased.xyz/jiayi_pirate/status/1882839370505621655

- pivot-to-ai.com DeepSeek AI leaves glaring security hole exposing user data, Italy blocks DeepSeek

DeepSeek provided a hilarious back-to-earth moment for the American AI-VC-industrial complex earlier this week. But if you first assume everyone in the AI bubble is a grifter of questionable compet…

- arstechnica.com “Just give me the f***ing links!”—Cursing disables Google’s AI overviews

The latest trick to stop those annoying AI answers is also the most cathartic.

-

a handy list of LLM poisoners

tldr.nettime.org ASRG

Sabot in the Age of AI

Here is a curated list of strategies, offensive methods, and tactics for (algorithmic) sabotage, disruption, and deliberate poisoning.

🔻 iocaine: The deadliest AI poison—iocaine generates garbage rather than slowing crawlers. 🔗 https://git.madhouse-projec...

-

Deepseek Tianenmen square controversy gets weirder

So I was just reading this thread about deepseek refusing to answer questions about Tianenmen square.

It seems obvious from screenshots of people trying to jailbreak the webapp that there's some middleware that just drops the connection when the incident is mentioned. However I've already asked the self hosted model multiple controversial China questions and it's answered them all.

The poster of the thread was also running the model locally, the 14b model to be specific, so what's happening? I decide to check for myself and lo and behold, I get the same "I am sorry, I cannot answer that question. I am an AI assistant designed to provide helpful and harmless responses."

Is it just that specific model being censored? Is it because it's the qwen model it's distilled from that's censored? But isn't the 7b model also distilled from qwen?

So I check the 7b model again, and this time round that's also censored. I panic for a few seconds. Have the Chinese somehow broken into my local model to cover it up after I downloaded it?

I check the screenshot I have of it answering the first time I asked and ask the exact same question again, and not only does it work, it acknowledges the previous question.

So wtf is going on? It seems that "Tianenmen square" will clumsily shut down any kind of response, but Tiananmen square is completely fine to discuss.

So the local model actually is censored, but the filter is so shit, you might not even notice it.
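To check the spelling effect without chat history muddying things, you can send identical single-turn prompts that differ only in spelling. A minimal sketch, assuming (the post doesn't say which runner was used) an Ollama server on its default port with the `deepseek-r1:7b` and `deepseek-r1:14b` tags pulled; the prompt wording and helper names are hypothetical:

```python
import json
import urllib.request

# Canned refusal quoted in the post above.
REFUSAL = "I am sorry, I cannot answer that question."

# Assumption: Ollama's HTTP API on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def is_refusal(answer: str) -> bool:
    """True if the model's answer contains the canned refusal text."""
    return REFUSAL.lower() in answer.lower()


def ask(model: str, prompt: str) -> str:
    """Send one non-streaming, history-free prompt and return the model's text."""
    payload = json.dumps({
        "model": model,
        "prompt": prompt,
        "stream": False,
        # Temperature 0 cuts down run-to-run randomness, which the post ran into.
        "options": {"temperature": 0},
    }).encode()
    req = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]


def compare(models=("deepseek-r1:7b", "deepseek-r1:14b"),
            spellings=("Tianenmen", "Tiananmen")) -> None:
    """Ask every model the same question under each spelling and report refusals."""
    for spelling in spellings:
        # Only the spelling changes between prompts.
        prompt = f"What happened at {spelling} Square in 1989?"
        for model in models:
            verdict = "refused" if is_refusal(ask(model, prompt)) else "answered"
            print(f"{model:16} {spelling:10} {verdict}")
```

With the server running and both models pulled, calling `compare()` prints one line per model and spelling. Because each request starts fresh, a model can't "acknowledge the previous question" the way it did in the chat session above.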

It'll be interesting to see what happens with the next release. Will the censorship be less thorough, stay the same, or will China again piss away a massive amount of soft power and goodwill over something that everybody knows about anyway?

- pivot-to-ai.com Official Israeli AI Twitter bot backfires, makes pro-Palestinian posts, trolls other official accounts

Israel relies heavily on social media to promote official viewpoints, especially in the current conflict in Gaza. One Israeli Twitter bot, @FactFinderAI, is for “countering misinformation with know…

- pivot-to-ai.com DeepSeek slaps OpenAI, tech stocks crash

DeepSeek announced its new R1 model on January 20. They released a paper on how R1 was trained on January 22. Over the weekend, the DeepSeek app became the number-one download on the Apple App Stor…

-

elon musk's obsession with the tic tac toe of video games

We all know Elon Musk as the funniest man alive.

but did you know that Musk is also a lifelong gamer? for example, here he is in the chess club of his whites-only apartheid prep school.

[image: black and white photo of some kids in suits]

grew out of that though.

really want to highlight the phrase "understandable when all we had to play with were squirrels and rocks." what the fuck is he talking about?

in any case, he clearly has some issues around the game, and I suspect they go back to his performance in the chess club. the dude loves to downplay the complexity of the game.

[image: Musk tweet: "Chess is such a simple game"]

[image: Musk tweet: "This is cool, but I am quite certain that Chess can be fully solved, like Checkers"]

he knows how to tune it up, though.

hmm. fog of war, tech trees, randomized starting positions. that actually sounds a little familiar. could it be...?

OH YEAH BABY! you knew it had to be polytopia!

what the fuck is polytopia?

this is polytopia:

[image: low poly computer game screenshot, isometric view of a tiled landscape]

sophisticated Gamers will notice three things here:

- this is clearly a mobile game first, meaning it was developed to fit into the industry's nastiest shithole: the ios and android app markets. i'll tell you right now that polytopia doesn't have gambling in its microtransactions (which instantly makes it cream of the crop), but you can expect it to be braindead and shallow.
- this game is for children. look at the bright primary colors, the characters' huge eyes, and the low poly style, which evokes minecraft.
- this game looks a LOT like civilization. specifically, it looks like civilization 3:

For those not familiar, the civilization series is the standard-bearer for the 4X genre. it's a style of slow, macromanagement-focused, turn-based strategy built around the "four Xes" of exploration, expansion, exploitation, and extermination. thematically, they present societies as machines for producing imperialism. the civilization games are also fearsomely complex and famously deep.

not so with polytopia:

so yeah, it's baby civilization. but the thing that really kills me is that musk is obsessed with this game. like... really obsessed, according to a story published on yahoo finance.

This puff piece is sourced from "Benzinga," a tech finance news site that seems to mostly post about crypto, ai and tesla. context clues suggest the content is entirely ripped from walter isaacson's biography of musk. I have to emphasize that all of this is intended to be flattering - right in the middle, they take a break from the content to shill some affiliate link I won't click on:

that's the image of musk they want to convey. anything they include is in support of that.

saying "I am just wired for war" because you play a lot of polytopia is classic elon cringe. beating a game's developer is nothing to brag about, by the way. most game developers are terrible at their own games.

i would argue that ragequitting to the parking lot to smack down your employee/mother of your children in a baby game is also not something to brag about:

[image: headline "Elon Musk and Neuralink executive Shivon Zilis conceived twins through IVF: report"]

flexing on mom almost shows up more than once in the article...

... except, twist, for some reason he beat his employee but not his partner???

and yes, he sulked like a little shitbag when he lost. one has to suspect that if his sycophants weren't constantly throwing polytopia matches against him he'd have turned on it just like he did chess.

before you read this next bit I want to emphasize again that EVERYTHING IN THIS ARTICLE IS INTENDED TO BE FLATTERING.

his brother seems to feel the same way

(and from another benzinga-sourced yahoo finance story):

here's what i think: elon musk isn't learning shit from polytopia. he's a profoundly stupid and childish man who likes moving brightly colored blocks around and being told he won. he's projecting the disgusting and wretched pile of deficiencies which is his personality onto a game for babies. as someone who only fails upward and cannot stand to fail, it is impossible for him to learn from reality; doing so would require facing what is too painful to face.

-

- pivot-to-ai.com How to promote AI: make the ‘AI illiterate’ think it’s magic

How do we sell the public on the wonders of AI? You might foolishly think that we need to inform them of its incredible advantages or something. Ha! The paper “Lower Artificial Intelligence Literac…

-

Stubsack: weekly thread for sneers not worth an entire post, week ending 2nd February 2025

Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

> The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)
>
> Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Semi-obligatory thanks to @dgerard for starting this.)

- pivot-to-ai.com DeepSeek R1 is wildly overhyped, but you can try it at home

DeepSeek is a Chinese startup that’s massively hyped as a rival to OpenAI. DeepSeek claims its R1 model, released January 20, is comparable to OpenAI o1 — and R1 is open source! DeepSeek sell…

- pivot-to-ai.com AI doom cranks present new AI benchmark ‘Humanity’s Last Exam’ — be afraid!

The Center for AI Safety, an AI doom crank nonprofit, and Scale AI have released a new AI benchmark called “Humanity’s Last Exam.” This supposedly tests “world-class expert-level reasoning and know…

-

McSweeneys: Our Customers Demand Terrible AI Systems

www.mcsweeneys.net Our Customers Demand Terrible AI Systems

We’ve been banging our heads against the wall, trying to think of the new “it” thing our customers want. At one point, somebody suggested improving...

-

US Congress proposes bill to allow AI to prescribe drugs and medical treatment

Original post from the Fuck AI community: https://lemmy.world/post/24681591

The fact that this has even been proposed is horrifying on so many fucking levels. Technically it has to be approved by the state involved and the FDA, but opening this door even a crack is so absurdly out of touch with reality.

- www.nbcnews.com AI weapon detection system at Antioch High School failed to detect gun in Nashville shooting

A district official said the system failed to detect the shooter's handgun because of where cameras were in the school.

...because the shooter hid it from the cameras. What a shocker.

- pivot-to-ai.com OpenAI launches Operator agent, Stargate money will go only to OpenAI

On Monday, OpenAI launched a “research preview” of Operator, an AI agent that browses the web — “you give it a task and it will execute it” — for anyone paying $200/month for ChatGPT Pro. [OpenAI] …