https://static.xtremeownage.com/blog

46 watts.. but, yea, I expected lower.

But, I suppose when it's spinning 4x Seagate Exos, they like their juice.

It apparently doesn't allow HDD hibernation while containers are running, and doesn't appear to like to use any sleep states.

Key word, is idle.

Synology... and HDD hibernation don't really go together very well. If you have containers running, it won't let the HDDs hibernate at all. And- I have a minio instance running.

Cars, Computer, and Code.

Unless you have a wall of old nokia phones..... it should be quite scary.

Eh... Mutually assured destruction.

It's a very scary phrase.

That, is a pretty good deal. Better start picking up some MD1200s!

Nope, not at all.

Behind every successful story, is a lot of failures. (or- really rich parents).

I agree, I'd be picking up a bunch of those, if that were the case.

esp32-c6 (supports zigbee), is pretty cheap.

No.

I wouldn't vote for Hillary period for many reasons. Her sex is not one of them.

A random fact, I actually did vote for a woman to be president. But, it damn sure was not Hillary. There is too much stink associated with her. Too much shit swept under the rug.

The other admin now "owns" this instance, and hosts it in the EU.

I am just a glorified moderator now.

I'd say, you have a small instance.

I used to host lemmyonline.com, which has somewhere around 50-100 users.

It was upwards of 50-80g of disk space, and it used a pretty good chunk of bandwidth. CPU/Memory requirements were not very high though.

I'd gladly donate a few TB, but I'm not about to fill my entire array for books I'll never read...

Nope.

Still just feel like a kid, with extra responsibilities, while raising my own kids. Guess sometime around 50 or so i'll start feeling like an "adult"

Although, at least I call myself a dumbass, after doing something stupid, or wasting money on crap.

This platform* is getting overrun by trolls and tankies. I’m going back to Reddit

Anti-DDOS, eh?

You lost me there. No self-hosted anti-DDoS solution is going to be effective, because any decent DDoS attack can easily overwhelm your WAN connection (and potentially even your ISP's upstreams).

It could end up being a shart.

But, it has no network connectivity! That is against God's will.

(Thus, no telemetry either)

Very interesting os though. Lots of very cool concepts

Not a clue.

Maybe they like the pretty dashboard pihole has.

I use vlans to work with it.

Lemmyonline.com, will go offline 2023-09-04

As promised, if I brought the instance offline, I would give you a heads up in advance.

Here are the reasons for me coming to this decision-

Moderation / Administration

Lemmy has absolutely ZERO administration tools, other than the ability to create a report. This makes it extremely difficult to properly administer anything.

As well, other than running reports and queries against the local database manually, I literally do not have insight into anything. I can't even see a list of which users are registered on this instance without running a query on the database.

Personal Liability

I host lemmyonline.com on some of my personal infrastructure. It shares servers, storage, etc. It is powered via my home solar setup, and actually doesn't cost much to keep online.

However- for a project which compensates me exactly $0.00 USD (No- I still don't take donations). It is NOT worth the additional liability I am taking on.

That liability being: trolls/attackers are currently uploading child porn to lemmy. Thumbnails and content get synced to this instance. At that point, I am on the hook for this content. This also goes back to the problem of having basically no moderation capabilities.

Once something is posted, it is sent everywhere.

Here in the US, they like to send no-knock raids out. That is no-bueno.

Project Inefficiencies

One issue I have noticed: every single image/thumbnail appears to get cached by pictrs. This data is never cleaned up or purged, so it will just keep growing and growing. The growth isn't drastic, around 10-30G of new data per week. However, it isn't sustainable, especially since, again, this project compensates me nothing. Hosting 100G of content isn't going to be a problem. When we start looking at 1T, 10T, etc., that costs money.

It's not as simple as tossing another disk into my cluster. The storage needs redundancy. So, you need multiple disks there.

Then, you need backups. A few more disks here.

Then, we need offsite backups. These cost $/TB stored.

I don't mind putting some resources up front to host something that takes a nominal amount of resources. However, based on my stats, it's going to continue to grow forever, as there is no purge/timeout/lifespan attached to these objects.
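To put rough numbers on that, here is a minimal back-of-the-envelope sketch. It takes the 10-30G per week growth and the 100G / 1T figures from above, and assumes linear growth, 3 redundant copies, and 2 backup copies (one local, one offsite). The function names and the copy counts are my own assumptions for illustration, not measurements from the instance.

```python
def weeks_until(target_gb, current_gb, growth_gb_per_week):
    """Weeks until the image cache reaches target_gb, assuming constant weekly growth."""
    if growth_gb_per_week <= 0:
        raise ValueError("growth_gb_per_week must be positive")
    return max(target_gb - current_gb, 0.0) / growth_gb_per_week


def raw_footprint_gb(logical_gb, replicas=3, backup_copies=2):
    """Total disk consumed once redundancy and backups are counted.

    replicas and backup_copies are assumed values, not taken from the post.
    """
    return logical_gb * (replicas + backup_copies)


if __name__ == "__main__":
    # Growth range reported above: 10-30G of new data per week, starting near 100G.
    for rate in (10, 30):
        weeks = weeks_until(1000, 100, rate)
        print(f"{rate}G/week: about {weeks:.0f} weeks to reach 1T")
    print(f"1T of content needs about {raw_footprint_gb(1000):.0f}G of raw disk")
```

Under those assumptions, each 1T of cached images would occupy several terabytes of actual disk, which is where the cost comes from.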

I don't enjoy lemmy enough to want to put up with the above headaches.

Lets face it. You have already seen me complain about the general negativity around lemmy.

The quality of content here, just isn't the same. I have posted lots of interesting content to try and get collaboration going. But, it just doesn't happen.

I just don't see nearly as much interesting content, as I want to interact with.

Summary-

I get no benefit from hosting lemmy online. It was a fun side project for a while. I refuse to attempt to monetize it as well.

As such, since I don't enjoy it, and the process of keeping on top of the latest attacks for the week is time consuming and boring, the plan is simple.

The servers will go offline 2023-09-04.

If you wish to migrate your account to another instance-

Here is a tool recently released.

https://github.com/gusVLZ/lemmy_handshake

Pictrs disabled

A heads up....

Since, attackers/etc are now uploading CSAM (child porn....) to lemmy, which gets federated to other instances....

Because I really don't want any reason for the feds to come knocking on my door, as of this time, pictrs is now disabled.

This means.... if you try to post an image, it will fail. As well, you will notice other issues potentially.

Driver for this: https://lemmyonline.com/post/454050

This- is a hobby for me. Given the complete and utter lack of moderation tools to help me properly filter content, the nuclear approach is the only approach here.

CloudNordic said a ransomware attack destroyed customer data on its servers, including primary and secondary backups.

> Both CloudNordic and Azero said that they were working to rebuild customers’ web and email systems from scratch, albeit without their data.

Yea.... Don't bother. But, do expect to hear from my lawyers.....

> CloudNordic said that it “had no knowledge that there was an infection.”

> CloudNordic and Azero are owned by Denmark-registered Certiqa Holding, which also owns Netquest, a provider of threat intelligence for telcos and governments.

Edit-

https://www.cloudnordic.com/

Garage54: We make a car from logs in the forest in just 24 hours

Negativity on Lemmy

I am just wondering... is it me- or is there a LOT of just general negativity here.

Every other post I see is...

- America is bad.

- Capitalism is bad. Socialism/Communism is good.

- If you don't like communism, you are a fascist nazi.

Honestly, it's kind of killing my mood with Lemmy. There are a few decent communities/subs here, but, the quality of content appears to be falling.

I mean, FFS. It can't just be me that is noticing this. It honestly feels like I am supporting a communist platform here.

I am on social media to post and read about things related to technology, automation, race cars, etc.

Every other technology post, is somebody bashing on Elon Musk (actually- that is deserved), or talking about Reddit (Let it go. Seriously. We are here, it is there).

On my hobby of liking racecars, I guess, half of the people on lemmy feel it is OK to vandalize a car for being too big.... and car hate culture is pretty big.

All of this is really turning off my mood regarding lemmy.

Cars, Computer, and Code.

My adventures in building out a ceph cluster for proxmox storage.

As a random note, my particular instance (lemmyonline.com) is hosted on that particular ceph cluster.

Engineering Explained: Toyota Developed A Liquid Hydrogen Combustion Engine!

How To Blow Up a 1,300 Horsepower Supra in One Day...

Well... That didn't last long...

FIRST DRIVE and Dyno of Leroy Jr.!!! We Might've Made It Too Fast... (Supercharger is SCREAMIN)

I might have a problem......

I can't say for sure- but, there is a good chance I might have a problem.

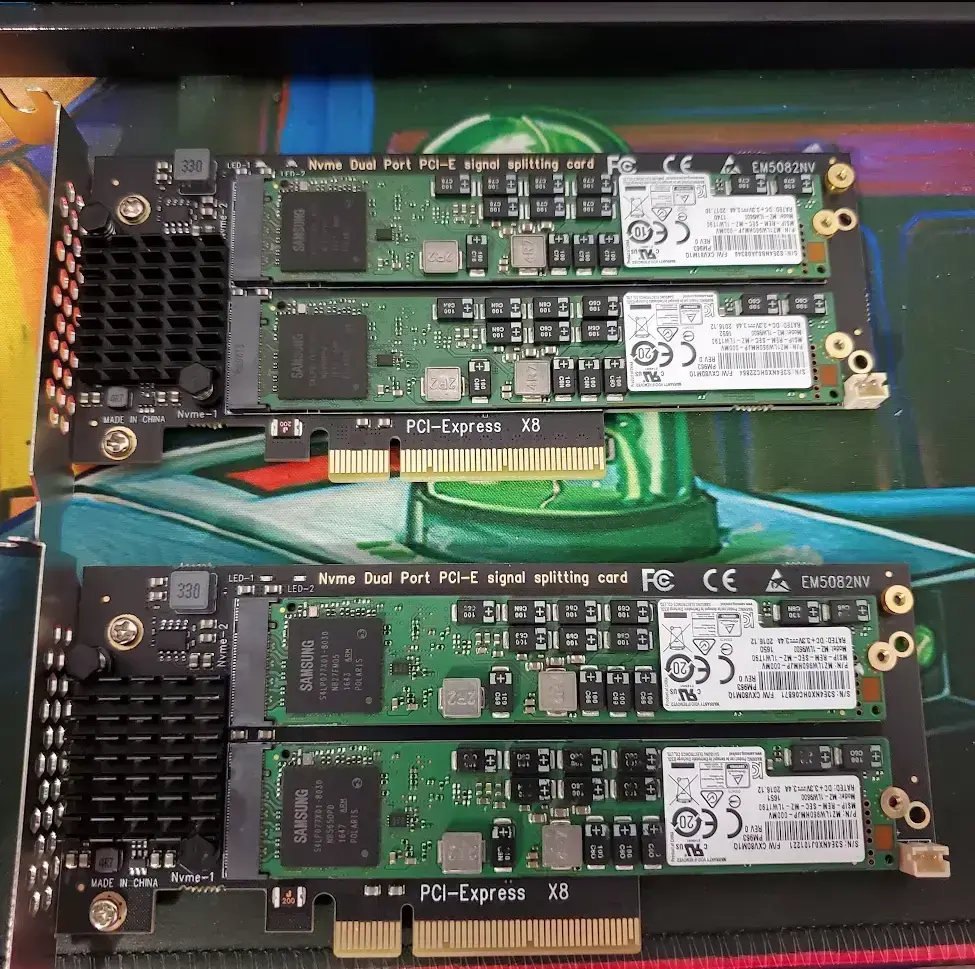

The main picture attached to this post, is a pair of dual bifurcation cards, each with a pair of Samsung PM963 1T enterprise NVMes.

It is going into my r730XD. Which... is getting pretty full. This will fill up the last empty PCIe slots.

But, knock on wood, My r730XD supports bifurcation! LOTS of Bifurcation.

As a result, it now has more HDDs and NVMes than I can count.

What's the problem you ask? Well. That is just one of the many servers I have laying around here, all completely filled with NVMe and SATA SSDs....

Figured I would share. Seeing a bunch of SSDs is always a pretty sight.

And- as of two hours ago, my particular lemmy instance was migrated to these new NVMes completely transparently too.

LemmyOnline Updated to 0.18.4

Sorry for the ~30 seconds of downtime earlier, however, we are now updated to version 0.18.4.

Base Lemmy Changes:

https://github.com/LemmyNet/lemmy/compare/0.18.3...0.18.4

Lemmy UI Changes:

https://github.com/LemmyNet/lemmy-ui/compare/0.18.3...0.18.4

Official patch notes: https://join-lemmy.org/news/2023-08-08_-_Lemmy_Release_v0.18.4

Lemmy

- Fix fetch instance software version from nodeinfo (#3772)

- Correct logic to meet join-lemmy requirement, don’t have closed signups. Allows Open and Applications. (#3761)

- Fix ordering when doing a comment_parent type list_comments (#3823)

Lemmy-UI

- Mark post as read when clicking “Expand here” on the preview image on the post listing page (#1600) (#1978)

- Update translation submodule (#2023)

- Fix comment insertion from context views. Fixes #2030 (#2031)

- Fix password autocomplete (#2033)

- Fix suggested title " " spaces (#2037)

- Expanded the RegEx to check if the title contains new line caracters. Should fix issue #1962 (#1965)

- ES-Lint tweak (#2001)

- Upgrading deps, running prettier. (#1987)

- Fix document title of admin settings being overwritten by tagline and emoji forms (#2003)

- Use proper modifier key in markdown text input on macOS (#1995)

Experiments in Ceph (with Proxmox)

So, last month, my kubernetes cluster decided to literally eat shit while I was out on a work conference.

When I returned, I decided to try something a tad different, by rolling out proxmox to all of my servers.

Well, I am a huge fan of hyper-converged, and clustered architectures for my home network / lab, so, I decided to give ceph another try.

I have previously used it with relative success with Kubernetes (via rook/ceph), and currently leverage longhorn.

Cluster Details

- Kube01 - Optiplex SFF
  - i7-8700 / 32G DDR4
  - 1T Samsung 980 NVMe
  - 128G KIOXIA NVMe (Boot disk)
  - 512G Sata SSD
  - 10G via ConnectX-3

- Kube02 - R730XD
  - 2x E5-2697a v4 (32c / 64t)
  - 256G DDR4
  - 128T of spinning disk.
  - 2x 1T 970 evo
  - 2x 1T 970 evo plus
  - A few more NVMes, and Sata
  - Nvidia Tesla P4 GPU.
  - 2x Google Coral TPU
  - 10G intel networking

- Kube05 - HP z240
  - i5-6500 / 28G ram
  - 2T Samsung 970 Evo plus NVMe
  - 512G Samsung boot NVMe
  - 10G via ConnectX-3

- Kube06 - Optiplex Micro
  - i7-6700 / 16G DDR4
  - Liteon 256G Sata SSD (boot)
  - 1T Samsung 980

Attempt number one.

I installed and configured ceph, using Kube01, and Kube05.

I used a mixture of 5x 970 evo / 970 evo plus / 980 NVMe drives, and expected it to work pretty decently.

It didn't. The IO was so bad, it was causing my servers to crash.

I ended up removing ceph, and using LVM / ZFS for the time being.

Here are some benchmarks I found online:

https://docs.google.com/spreadsheets/d/1E9-eXjzsKboiCCX-0u0r5fAjjufLKayaut_FOPxYZjc/edit#gid=0

https://www.proxmox.com/images/download/pve/docs/Proxmox-VE_Ceph-Benchmark-202009-rev2.pdf

The TLDR; after lots of research- Don't use consumer SSDs. Only use enterprise SSDs.

Attempt / Experiment Number 2.

I ended up ordering 5x 1T Samsung PM863a enterprise sata drives.

After reinstalling ceph, I put three of the drives into kube05, and one more into kube01 (no ports / power for adding more than a single sata disk...).

And- put the cluster together. At first, performance wasn't great.... (but, was still 10x the performance of the first attempt!). But, after updating the crush map to set the failure domain to OSD rather than host, performance picked up quite dramatically.

This- is due to the current imbalance of storage/host. Kube05 has 3T of drives, Kube01 has 1T. No storage elsewhere.
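Here is a small sketch of why the failure domain matters with this layout. It is not how CRUSH actually computes placement. It only counts how many distinct failure domains exist, assuming a replicated pool that wants 3 copies (the pool size is not stated above, so treat it as an assumption). The OSD names are hypothetical.

```python
# OSD layout from attempt 2: three 1T OSDs on kube05, one on kube01.
# The osd.N names are made up for illustration.
OSD_HOSTS = {
    "osd.0": "kube05",
    "osd.1": "kube05",
    "osd.2": "kube05",
    "osd.3": "kube01",
}


def failure_domains(osd_hosts, domain):
    """Count the distinct buckets replicas can be spread across."""
    if domain == "osd":
        return len(osd_hosts)
    if domain == "host":
        return len(set(osd_hosts.values()))
    raise ValueError(f"unknown failure domain: {domain}")


def can_place(osd_hosts, domain, replicas=3):
    """True if every replica can land in its own failure domain."""
    return failure_domains(osd_hosts, domain) >= replicas


if __name__ == "__main__":
    for domain in ("host", "osd"):
        count = failure_domains(OSD_HOSTS, domain)
        ok = can_place(OSD_HOSTS, domain)
        print(f"failure domain={domain}: {count} buckets, 3 replicas placeable: {ok}")
```

With only two hosts holding storage, a host-level rule has two buckets to work with, while an OSD-level rule has four. The trade-off is that with the OSD failure domain, several copies of the same data can sit on kube05, so losing that one host can take out more than one replica.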

BUT.... since this was a very successful test, and it was able to deliver enough IOPs to run my I/O heavy kubernetes workloads.... I decided to take it up another step.

A few notes-

Can you guess which drive is the Samsung 980, and which drives are enterprise SATA SSDs? (look at the latency column)

Future - Attempt #3

The next goal, is to properly distribute OSDs.

Since, I am maxed out on the number of 2.5" SATA drives I can deploy... I picked up some NVMe.

5x 1T Samsung PM963 M.2 NVMe.

I picked up a pair of dual-slot half-height bifurcation cards for Kube02. This will allow me to place 4 of these into it, with dedicated bandwidth to the CPU.

The remaining one, will be placed inside of Kube01, to replace the 1T samsung 980 NVMe.

This should give me a pretty decent distribution of data, and with all enterprise drives, it should deliver pretty acceptable performance.

More to come....

Home Assistant 2023.8: Translated services, events, and wildcards!

Sentence triggers can now contain wildcards, a brand new event entity, all services are now translated into your language, generate images with OpenAI's DALL-E using Assist, and more!

Youtube / Cleetus: ESP Jet Sprint Day 1 - I Crashed on My FIRST DRIVE... This Boat is INSANELY Fast!!!