Search

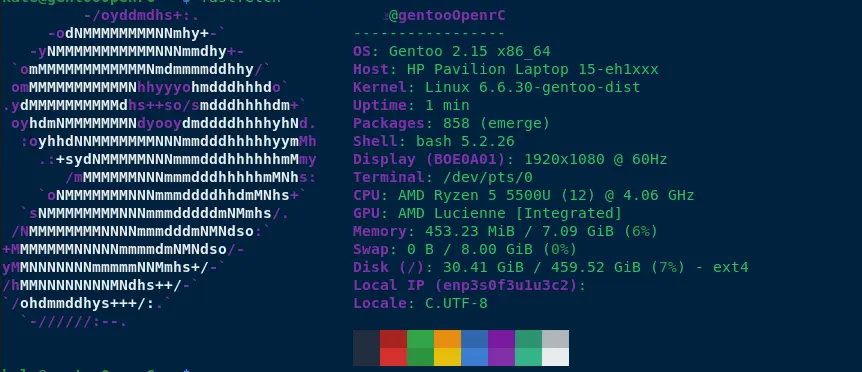

Port Forwarding Minecraft server hardening question (Gentoo)

So I've been hosting a modded Minecraft server for my friends and me on weekends. While it's been a blast, I've noticed that our current setup using LAN has its limitations. My friends have been eagerly waiting for their next "fix" (i.e., when they can get back online), and I've been replying with a consistent answer: this Friday.

However, exploring cloud providers to spin up a replica of my beloved "Dog Town" Server was a costly endeavor, at least for a setup that's close to my current configuration. As a result, I've turned my attention to self-hosting a Minecraft server on my local network and configuring port forwarding.

To harden my server, I've implemented the following measures:

- Added ufw (Uncomplicated Firewall) for enhanced security.

- Blocked all SSH connections except for the IP addresses of my main PC and LAN rig.

- Enabled SSH public key authentication only.

- Rebuilt all packages using a hardened GCC compiler.

- Disabled root access via `/etc/passwd`.

- Created two users: one with sudo privileges, allowing full access; the other with limited permissions to run a specific script (`./run.sh`) for starting the server.

Additionally, I've set up an fcron job (fcron is a job scheduler) running as root, whose login is disabled, which synchronizes my Minecraft server into four folders at the following intervals: 1 hour, 30 minutes, 10 minutes, and 1 day. This ensures that any mods we use are properly synced in case of issues.
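A minimal Python sketch of the tiered sync described above, assuming a scheduler (fcron or similar) invokes it once per minute. The tier folder names in `TIERS` and the `due_tiers`/`sync` helpers are hypothetical illustrations, not part of the original setup.

```python
import shutil
from pathlib import Path

# Hypothetical tier folders and their intervals in minutes, mirroring
# the 10 min / 30 min / 1 h / 1 day schedule described above.
TIERS = {"every-10min": 10, "every-30min": 30, "hourly": 60, "daily": 1440}


def due_tiers(minute_of_day: int) -> list[str]:
    """Return the tiers whose interval evenly divides the current minute of the day."""
    return [name for name, interval in TIERS.items() if minute_of_day % interval == 0]


def sync(server_dir: Path, backup_root: Path, tier: str) -> Path:
    """Copy the server directory into backup_root/tier.

    Existing files are overwritten, but files deleted from server_dir are
    NOT removed from the copy (unlike `rsync --delete`).
    """
    dest = backup_root / tier
    shutil.copytree(server_dir, dest, dirs_exist_ok=True)
    return dest
```

Called once a minute, `due_tiers(now.hour * 60 + now.minute)` picks which folders to refresh; for an exact mirror that also drops deleted files, `rsync -a --delete` is the usual tool.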

Any suggestions for making the computer more secure, as well as backup solutions? Thanks!

Added note: what hostnames do you give your servers? I used albums and singles from my favorite bands as hostnames.

LDAP to UNIX user proxy

Is there any service that will speak LDAP but just respond with the local UNIX users?

Right now I have good management for local UNIX users, but every service wants to do its own auth. This means it's a pain remembering different passwords, configuring passwords when setting up a new service, and whatnot.

I noticed that a lot of services support LDAP auth, but I don't want to make my UNIX user accounts depend on LDAP for simplicity. So I was wondering if there was some sort of shim that will talk the LDAP protocol but just do authentication against the regular user database (PAM).

The closest I have seen is the services.openldap.declarativeContents NixOS option which I can probably use by transforming my regular UNIX settings into an LDAP config at build time, but I was wondering if there was anything simpler.

(Related note: I really wish that services would let you specify the user via HTTP header, then I could just manage auth at the reverse-proxy without worrying about bugs in the service)

Self-hosted Flatpak Repositories

Hi there self-hosted community.

I hope it's not out of line to cross-post this type of question, but I thought that people here might also have some unique advice on this topic. I'm not sure whether cross-posting immediately after the first post is against Lemmy etiquette or not.

cross-posted from: https://lemmy.zip/post/22291879

> I was curious if anyone has any advice on the following:
>
> I have a home server that is always accessed by my main computer for various reasons. I would love to make it so that my locally hosted Gitea could run actions to build local forks of certain applications, and then, on success, trigger Flatpak to build my local fork(s) of certain programs once a month and host those applications (for local use only) on my home server for other computers on my home network to install. I'm thinking mostly of development branches of certain applications, experimental applications, and miscellaneous GUI applications that I've made but infrequently update and want a runnable instance available in case I redo it.
>
> Does anybody have any advice or ideas on how to achieve this? Is there a way to make a Flatpak repository via a Docker image that tries to build certain Flatpak repositories on request via a local network? Additionally, if that isn't a known thing, does anyone have any experience hosting Flatpak repositories on a local-network server? Or is there a good reason not to do this?

Having difficulty visiting an mTLS-authenticated website from GrapheneOS

I host a website that uses mTLS for authentication. I created a client cert and installed it in Firefox on Linux, and when I visit the site for the first time, Firefox asks me to choose my cert and then I'm able to visit the site (and every subsequent visit to the site is successful without having to select the cert each time). This is all good.

But when I install that client cert into GrapheneOS (settings -> encryption & credentials -> install a certificate -> vpn & app user certificate), no browser app seems to recognize that it exists at all. Visiting the website from Vanadium, Fennec, or Mull browsers all return "ERR_BAD_SSL_CLIENT_AUTH_CERT" errors.

Does anyone have experience successfully using an mTLS cert in GrapheneOS?

Uptime monitoring in Windows

> Disclaimer: This is for folks who are running services on Windows machines and do not have more than one device. I am neither an expert at self-hosting nor at PowerShell. I curated most of this code by doing a lot of "Google-ing" and testing over the years. Feel free to correct any mistakes I have in the code.

Background

TLDR: Windows user needs an uptime monitoring solution

Whenever I searched for uptime monitoring apps, most of the ones that showed up were either hosted on Linux or in containers, and all I wanted was a simple .exe installer for some app that would send me alerts when a service or the computer was down. Unfortunately, I couldn't find anything. If you know of one, feel free to recommend it.

To get uptime monitoring on Windows, I had to turn to scripting along with a hosted solution (because you shouldn't host the monitoring service on the same device where your apps are running, in case the machine goes down). I searched and tested a lot of code to finally end up with the following.

Now, I have services running on both Windows and Linux and I use Uptime Kuma and the following code for monitoring. But, for people who are still on Windows and haven't made the jump to Linux/containers, you could use these scripts to monitor your services with the same device.

Solution

TLDR: A PowerShell script checks the services/processes/URLs/ports and pings the hosted solution to send out notifications.

What I came up with is a PowerShell script that would run every 5 minutes (your preference) using Windows Task Scheduler to check if a Service/Process/URL/Port is up or down and send a ping to Healthchecks.io accordingly.

Prereqs

- Sign up on healthchecks.io and create a project

- Add an integration for your favorite notification method (there are several options; I use Telegram)

- Add a Check on Healthchecks.io for each of the services you want to monitor. Ex: Radarr, Bazarr, Jellyfin. When creating the check, make sure to remember the Slug you used (custom or autogenerated) for that service.

- Install the latest version of PowerShell 7

- Create a PowerShell file in your desired location. Ex: `healthcheck.ps1` in the C drive

- Go to project settings on Healthchecks.io, get the Ping key, and assign it to a variable in the script. Ex: `$HC = "https://hc-ping.com/<YOUR_PING_KEY>/"`. The Ping key is used for pinging Healthchecks.io based on the status of the service.
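The URL scheme the scripts rely on (ping key, then the check's slug, with `/fail` appended to report a failure) can be summarized in a few lines of Python. This is an illustrative sketch only; `ping_url` and `send_ping` are hypothetical names, and `HC` is the same placeholder as above.

```python
import urllib.request

# Placeholder, same as the $HC value above; substitute your real ping key.
HC = "https://hc-ping.com/<YOUR_PING_KEY>/"


def ping_url(slug: str, up: bool) -> str:
    """Success pings go to <HC><slug>; failures append /fail."""
    return f"{HC}{slug}" if up else f"{HC}{slug}/fail"


def send_ping(slug: str, up: bool, timeout: float = 10.0) -> int:
    """Send the ping and return the HTTP status code."""
    with urllib.request.urlopen(ping_url(slug, up), timeout=timeout) as resp:
        return resp.status
```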

Code

- There are two ways you can write the code: Either check one service or loop through a list.

Port

- To monitor a list of ports, we need to add them to the `Services.csv` file.

> The names of the services need to match the Slug you created earlier, because Healthchecks.io uses that to figure out which Check to ping.

Ex:

```csv
"Service", "Port"
"qbittorrent", "5656"
"radarr", "7878"
"sonarr", "8989"
"prowlarr", "9696"
```

- Then copy the following code to `healthcheck.ps1`:

```powershell
Import-CSV C:\Services.csv | foreach {
    Write-Output ""
    Write-Output $($_.Service)
    Write-Output "------------------------"
    $RESPONSE = Test-Connection localhost -TcpPort $($_.Port)
    if ($RESPONSE -eq "True") {
        Write-Host "$($_.Service) is running"
        curl "$HC$($_.Service)"
    } else {
        Write-Host "$($_.Service) is not running"
        curl "$HC$($_.Service)/fail"
    }
}
```

> The script reads the Services.csv file (Line 1), checks whether each of those ports is listening ($($_.Port) on Line 5), and pings Healthchecks.io (Line 8 or 11) based on its status, using the appropriate name ($($_.Service)). If the port is not listening, it pings the URL with a trailing /fail (Line 11) to indicate that it is down.
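For comparison, here is a cross-platform Python sketch of the same port check, assuming the `Services.csv` format shown above. `is_listening` and `load_services` are hypothetical helpers, not part of the original script; `skipinitialspace=True` is needed because the example CSV puts a space after each comma.

```python
import csv
import io
import socket


def is_listening(port: int, host: str = "localhost", timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and name-resolution failures all land here.
        return False


def load_services(csv_text: str) -> list[tuple[str, int]]:
    """Parse "Service", "Port" rows; skipinitialspace handles the space after each comma."""
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    return [(row["Service"], int(row["Port"])) for row in reader]
```

Each `(slug, port)` pair would then feed the same success or `/fail` ping logic as the PowerShell version.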

Service

- The following code checks whether a service is running.

You can add more services on line 1 as comma-separated values. Ex: `@("bazarr","flaresolverr")`. These also need to match the Slug.

```powershell
$SERVICES = @("bazarr")
foreach ($SERVICE in $SERVICES) {
    Write-Output ""
    Write-Output $SERVICE
    Write-Output "------------------------"
    $RESPONSE = Get-Service $SERVICE | Select-Object Status
    if ($RESPONSE.Status -eq "Running") {
        Write-Host "$SERVICE is running"
        curl "$HC$SERVICE"
    } else {
        Write-Host "$SERVICE is not running"
        curl "$HC$SERVICE/fail"
    }
}
```

> The script loops through the list of services (Line 1), checks whether each of them is running (Line 6), and pings Healthchecks.io based on its status.

Process

- The following code checks whether a process is running.

The names on line 1 need to match their Slugs.

```powershell
$PROCESSES = @("tautulli","jellyfin")
foreach ($PROCESS in $PROCESSES) {
    Write-Output ""
    Write-Output $PROCESS
    Write-Output "------------------------"
    $RESPONSE = Get-Process -Name $PROCESS -ErrorAction SilentlyContinue
    if ($RESPONSE -eq $null) {
        # Write-Host "$PROCESS is not running"
        curl "$HC$PROCESS/fail"
    } else {
        # Write-Host "$PROCESS is running"
        curl "$HC$PROCESS"
    }
}
```

URL

- This can be used to check whether a URL is responding.

The name on line 1 needs to match the Slug.

```powershell
$WEBSVC = "google"
$GOOGLE = "https://google.com"
Write-Output ""
Write-Output $WEBSVC
Write-Output "------------------------"
$RESPONSE = Invoke-WebRequest -URI $GOOGLE -SkipCertificateCheck
if ($RESPONSE.StatusCode -eq 200) {
    # Write-Host "$WEBSVC is running"
    curl "$HC$WEBSVC"
} else {
    # Write-Host "$WEBSVC is not running"
    curl "$HC$WEBSVC/fail"
}
```
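A Python sketch of the same URL check, for readers not on PowerShell. `url_is_up` is a hypothetical helper; `verify_tls=False` is meant to mirror `-SkipCertificateCheck` above. Note that `urllib` raises `HTTPError` for 4xx/5xx responses rather than returning them, so those are caught and reported as down.

```python
import ssl
import urllib.error
import urllib.request


def url_is_up(url: str, timeout: float = 10.0, verify_tls: bool = False) -> bool:
    """Return True only if the URL answers with HTTP 200."""
    ctx = ssl.create_default_context()
    if not verify_tls:
        # check_hostname must be disabled before verification can be turned off.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=ctx) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # HTTPError (4xx/5xx) subclasses URLError; refused or timed-out
        # connections surface as URLError or OSError.
        return False
```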

Ping other machines

- If you have more than one machine and want to check their status from the Windows host, you can ping them.

- Here I also use a CSV file to list the machines. Make sure the server names match their Slugs.

Ex:

```csv
"Server", "IP"
"server2", "192.168.0.202"
"server3", "192.168.0.203"
```

```powershell
Import-CSV C:\Servers.csv | foreach {
    Write-Output ""
    Write-Output $($_.Server)
    Write-Output "------------------------"
    $RESPONSE = Test-Connection $($_.IP) -Count 1 | Select-Object Status
    if ($RESPONSE.Status -eq "Success") {
        # Write-Host "$($_.Server) is running"
        curl "$HC$($_.Server)"
    } else {
        # Write-Host "$($_.Server) is not running"
        curl "$HC$($_.Server)/fail"
    }
}
```

Task Scheduler

For the script to execute in intervals, you need to create a scheduled task.

- Open Task Scheduler, navigate to the Library, and click on `Create Task` on the right
  - Give it a name. Ex: `Healthcheck`
  - Choose `Run whether user is logged on or not`
  - Choose `Hidden` if needed

- On the Triggers tab, click on New
  - Choose `On a schedule`
  - Choose `One time` and select a date earlier than your current date
  - Select `Repeat task every` and choose the desired interval and duration. Ex: 5 minutes, indefinitely
  - Select `Enabled`

- On the Actions tab, click on New
  - Choose `Start a program`
  - Add the path to PowerShell 7 in Program: `"C:\Program Files\PowerShell\7\pwsh.exe"`
  - Point to the script in Arguments: `-windowstyle hidden -NoProfile -NoLogo -NonInteractive -ExecutionPolicy Bypass -File C:\healthcheck.ps1`

- For the rest of the tabs, choose whatever is appropriate for you.

- Hit OK/Apply and exit

Notification Method

Depending on the integration you chose, set it up using the Healthchecks docs.

I am using Telegram with the following configuration:

Name: Telegram

Execute on "down" events: POST https://api.telegram.org/bot<ID>/sendMessage

Request Body:

{ "chat_id": "<CHAT ID>", "text": "🔴 $NAME is DOWN", "parse_mode": "HTML", "link_preview_options": { "is_disabled": true } }

Request Headers: Content-Type: application/json

Execute on "up" events: POST https://api.telegram.org/bot<ID>/sendMessage

Request Body:

{ "chat_id": "<CHAT ID>", "text": "🟢 $NAME is UP", "parse_mode": "HTML", "link_preview_options": { "is_disabled": true } }

Request Headers: Content-Type: application/json

Closing

You can monitor up to 20 services for free. You can also self-host a Healthchecks instance (I wouldn't recommend it if you only have one machine).

I've been wanting to give something back to the community for a while. I hope this is useful to some of you. Please let me know if you have any questions or suggestions. Thank you for reading!

TV with infrared sensor (Mac mini)

inspired by this post

I have a Mac mini with an infrared receiver on it. I'd love to use it as a TV PC, ideally with an infrared remote too.

I am looking for software recommendations for this, as I've done basically no research.

What's my best option? Linux with Kodi? How would a remote connect, and which software is required for the remote to work?

Thanks!

Are selfhosted Piped instances still working?

All the public Piped instances are getting blocked by YouTube, but are small self-hosted instances, used by only a handful of users or just yourself, still working? I'm thinking of just self-hosting it.

On a side note, if I do it, I'd also like to install the new EFY redesign or is that branch too far behind?

Edit: As you can see in the replies, private instances still work. I also found the instructions for running the new EFY redesign here

git SSH through Tailscale sidecar container?

Hey all!

I posted this to /c/tailscale yesterday and I figured I'd post it here to get some more visibility.

I'm trying to ssh into my tailnet-hosted (through tailscale serve) gogs instance and I can't seem to figure out how. Has anyone tried doing this? Will I need to add a user to the sidecar container and add a shim like they do in the regular gogs setup? I appreciate any insight.

Edit: Added tag and modified title for clarity.

Multiple Kubernetes Services Using Same Port Without SNI

I am running a bare metal Kubernetes cluster on k3s with Kube-VIP and Traefik. This works great for services that use SSL/TLS as Server Name Indication (SNI) can be used to reverse proxy multiple services listening on the same port. Consequently, getting Traefik to route multiple web servers receiving traffic on ports 80 or 443 is not a problem at all. However, I am stuck trying to accomplish the same thing for services that just use TCP or UDP without SSL/TLS since SNI is not included in TCP or UDP traffic.

I tried to setup Forgejo where clients will expect to use commands like git clone [email protected].... which would ultimately use SSH on port 22. Since SSH uses TCP and Traefik supports TCPRoutes, I should be able to route traffic to Forgejo's SSH entry point using port 22, but I ran into an issue where the SSH service on the node would receive/process all traffic received by the node instead of allowing Traefik to receive the traffic and route it. I believe that I should be able to change the port that the node's SSH service is listening on or restrict the IP address that the node's SSH service is listening on. This should allow Traefik to receive the traffic on port 22 and route that traffic to Forgejo's SSH entry point while also allowing me to SSH directly into the node.

However, even if I get that to work correctly, I will run into another issue when other services that typically run on port 22 are stood up. For example, I would not be able to have Traefik reverse proxy both Forgejo's SSH entry point and an SFTP's entry point on port 22 since Traefik would only be able to route all traffic on port 22 to just one service due to the lack of SNI details.

The only viable solution that I can find is to run only one service's entry point on port 22 and run each of the other services' entry points on various ports. For instance, Forgejo's SSH entry point could be port 22 and the SFTP's entry point could be port 2222 (mapped to the pod's port 22). This would require multiple additional ports to be opened on the firewall, and each client would need its configuration and/or commands modified to connect to the service's non-standard port.

Another solution that I have seen is to use other services like stunnel to wrap traffic in TLS (similar to how HTTPS works), but I believe this will likely lead to even more problems than using multiple ports as every client would likely need to be compatible with those wrapper services.

Is there some other solution that I am missing? Is there something that I could do with Virtual IP addresses, multiple load balancer IP addresses, etc.? Maybe I could route traffic on Load_Balancer#1_IP_Address:22 to Forgejo's SSH entry point and Load_Balancer#2_IP_Address:22 to SFTP's entry point?
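The idea in the last question can be illustrated with a toy Python model of a layer-4 proxy. This is not Traefik or kube-vip code: `L4Router` is hypothetical, and `192.0.2.10`/`192.0.2.11` are documentation addresses standing in for two separate load-balancer IPs. Without SNI, the only routing key for plain TCP is the destination (IP, port), so two services can share port 22 only if they are reached through different IPs.

```python
class L4Router:
    """Toy model of a TCP/UDP proxy that can only route on (destination IP, port)."""

    def __init__(self) -> None:
        self.routes: dict[tuple[str, int], str] = {}

    def add(self, vip: str, port: int, backend: str) -> None:
        key = (vip, port)
        existing = self.routes.get(key)
        if existing is not None and existing != backend:
            # Same IP and port for a second backend: nothing in plain TCP
            # (no SNI, no Host header) could tell the two apart.
            raise ValueError(f"{vip}:{port} is already routed to {existing}")
        self.routes[key] = backend

    def route(self, dst_ip: str, dst_port: int) -> str:
        return self.routes[(dst_ip, dst_port)]


router = L4Router()
router.add("192.0.2.10", 22, "forgejo-ssh")  # hypothetical load-balancer IP #1
router.add("192.0.2.11", 22, "sftp")         # hypothetical load-balancer IP #2
```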

tl;dr: Is it possible to host multiple services that do not use SSL/TLS (ie: cannot use SNI) on the same port in a single Kubernetes cluster without using non-standard ports and port mapping?

Migrating and Upgrading Proxmox to New SSDs on Poweredge Safely

With the EOL of PVEv7 and my need for more storage space, I've decided to migrate my VMs to a larger set of drives.

I have PVE installed bare metal on a Dell R720 with RAID1 SSDs. I'm a bit nervous about the migration.

I plan on swapping the SSDs, installing PVE8 from scratch, then restoring VMs from backup.

Should I encounter an issue, am I able to swap the old RAID1 SSDs back in, or once I configure the new ones are the old drives done for? I'm managing RAID on a dell RAID controller.

I also have my data hard drives passed directly into a TrueNAS VM, which supplies other VMs via NFS. Is there anything I should be concerned about after migrating, such as errors re-passing the data drives to the TrueNAS VM? Or should everything just work again?

Is there a master PVE config file I can download before swapping drives that I can reference when configuring the new PVE install?

Weird (to me) networking issue - can you help?

I have two subnets and am experiencing some pretty weird (to me) behaviour - could you help me understand what's going on?

----

Scenario 1

PC: 192.168.11.101/24
Server: 192.168.10.102/24, 192.168.11.102/24

From my PC I can connect to .11.102, but not to .10.102:

```bash
ping -c 10 192.168.11.102 # works fine
ping -c 10 192.168.10.102 # 100% packet loss
```

----

Scenario 2

Now, if I disable .11.102 on the server (ip link set <dev> down) so that it only has an ip on the .10 subnet, the previously failing ping works fine.

PC: 192.168.11.101/24
Server: 192.168.10.102/24

From my PC:

```bash
ping -c 10 192.168.10.102 # now works fine
```

This is baffling to me... any idea why it might be?

----

Here's some additional information:

- The two subnets are on different VLANs (.10/24 is untagged and .11/24 is tagged 11).

- The PC and Server are connected to the same managed switch, which however does nothing "strange" (it just leaves tags as they are on all ports).

- The router is connected to the aforementioned switch and set to forward packets between the two subnets (I'm pretty sure I've configured it that way, plus IIUC the ping in the second scenario wouldn't work without forwarding).

- The router also has the same VLAN setup, and I can ping both .10.1 and .11.1 with no issue in both scenarios 1 and 2.

- In case it matters, machine 1 (the PC) has the following routes, set up by NetworkManager from DHCP:

```
default via 192.168.11.1 dev eth1 proto dhcp src 192.168.11.101 metric 410
192.168.11.0/24 dev eth1 proto kernel scope link src 192.168.11.101 metric 410
```

- In case it matters, machine 2 (the server) uses systemd-networkd, and the routes generated from DHCP are slightly different (after dropping the .11.102 address for scenario 2, the relevant routes of course disappear):

```
default via 192.168.10.1 dev eth0 proto dhcp src 192.168.10.102 metric 100
192.168.10.0/24 dev eth0 proto kernel scope link src 192.168.10.102 metric 100
192.168.10.1 dev eth0 proto dhcp scope link src 192.168.10.102 metric 100
default via 192.168.11.1 dev eth1 proto dhcp src 192.168.11.102 metric 101
192.168.11.0/24 dev eth1 proto kernel scope link src 192.168.11.102 metric 101
192.168.11.1 dev eth1 proto dhcp scope link src 192.168.11.102 metric 101
```

----

solution

(please do comment if something here is wrong or needs clarifications - hopefully someone will find this discussion in the future and find it useful)

In scenario 1, packets from the PC to the server are routed through .11.1.

Since the server also has an .11/24 address, packets from the server to the PC (including replies) are not routed and instead just sent directly over ethernet.

Since the PC does not expect replies from a different machine than the one it contacted, they are discarded on arrival.

The solution to this (if one still thinks the whole thing is a good idea), is to route traffic originating from the server and directed to .11/24 via the router.

This could be accomplished with ip route del 192.168.11.0/24, which would however break connectivity with .11/24 addresses (for a similar reason as above: incoming traffic would not be routed but replies would)...

The more general solution (which, IDK, may still have drawbacks?) is to setup a secondary routing table:

```bash
# Define the routing table (see "ip rule" and "ip route show table <table>")
echo 50 mytable >> /etc/iproute2/rt_tables
# "iif lo" selects only packets originating from the machine itself
ip rule add from 192.168.10.0/24 iif lo table mytable priority 1
# "dev eth0" is the interface with the .10/24 address, and might be superfluous
ip route add default via 192.168.10.1 dev eth0 table mytable
```

Now, in my mind, that should break connectivity with .10/24 addresses just like ip route del above, but in practice it does not seem to (if I remember I'll come back and explain why after studying some more)
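The asymmetric routing described above can be modeled in a few lines of Python. This is a deliberately simplified sketch, not how the kernel implements routing: it does longest-prefix matching over the server's routes from the post (the /32 gateway routes are left out), breaks ties by lowest metric, and treats the `ip rule` as "locally generated and source in 192.168.10.0/24 means use mytable". `lookup` and `select_route` are hypothetical names.

```python
import ipaddress

# (prefix, gateway or None for on-link, device, metric), from the server's routes above.
MAIN = [
    ("0.0.0.0/0", "192.168.10.1", "eth0", 100),
    ("192.168.10.0/24", None, "eth0", 100),
    ("0.0.0.0/0", "192.168.11.1", "eth1", 101),
    ("192.168.11.0/24", None, "eth1", 101),
]
# "mytable" from the ip-rule fix contains only a default route.
MYTABLE = [("0.0.0.0/0", "192.168.10.1", "eth0", 0)]


def lookup(table, dst):
    """Longest-prefix match; ties broken by the lowest metric. Returns (gateway, device)."""
    addr = ipaddress.ip_address(dst)
    candidates = [r for r in table if addr in ipaddress.ip_network(r[0])]
    best = max(candidates, key=lambda r: (ipaddress.ip_network(r[0]).prefixlen, -r[3]))
    return best[1], best[2]


def select_route(src, dst, locally_generated=True, with_rule=False):
    """Model of `ip rule add from 192.168.10.0/24 iif lo table mytable`."""
    if (with_rule and locally_generated
            and ipaddress.ip_address(src) in ipaddress.ip_network("192.168.10.0/24")):
        return lookup(MYTABLE, dst)
    return lookup(MAIN, dst)
```

In this model, the reply from .10.102 to the PC at .11.101 leaves directly on eth1 (gateway `None`), and with the rule it goes via 192.168.10.1 on eth0. The model also sends replies to other .10/24 hosts through the gateway, which is the breakage expected above; it does not explain why that did not happen in practice.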

TriliumNext Notes first stable release is now available! 🚀

🎁 Where to get it?

❔ Why TriliumNext?

TriliumNext has started as a fork of Trilium Notes at the beginning of 2024. The reason for the work is that the upstream project has entered maintenance phase and we would like to extend the application. The work so far has focused more on the technical aspects because most of the work has been done by u/zadam and handing over a project of this size is non-trivial. Some more technical work will be done in the upcoming versions after which the project can focus on improving the user experience as much as possible.

Some of the current features are listed below and can be found on the readme:

- 🎄 Notes can be arranged into an arbitrarily deep tree. A single note can be placed into multiple places in the tree (see cloning)

- 📝 Rich WYSIWYG note editing including e.g. tables, images and math with markdown autoformat

- ✏️ Support for editing notes with source code, including syntax highlighting

- 🔍 Fast and easy navigation between notes, full text search and note hoisting

- 🔢 Seamless note versioning

- #️⃣ Note attributes can be used for note organization, querying and advanced scripting

- 🔁 Synchronization with a self-hosted sync server (there's a 3rd party service for hosting a synchronisation server)

- 🌍 Sharing (publishing) notes to the public internet

- 🔐 Strong note encryption with per-note granularity

- 🖼️ Sketching diagrams with built-in Excalidraw (note type "canvas")

- 🗺️ Relation maps and link maps for visualizing notes and their relations

- 👨‍💻 Scripting - see Advanced showcases

- 🤖 REST API for automation

- ⬆️ Scales well in both usability and performance upwards of 100 000 notes

- 📱 Touch optimized mobile frontend for smartphones and tablets

- ✂️ Web Clipper for easy saving of web content

Upcoming Improvements

- 🔡 Support for multiple languages. (Work underway)
- 🚦 Improving the existing theme and decluttering the UI.
- 📱 Mobile improvements.
- ⌨️ Exploring additional editors such as a Markdown-based editor.
- 📓 Improving existing documentation. (Work underway)

⬆️ Porting from Trilium Notes?

There is no change in the database structure. TriliumNext Notes can be run instead of the original Trilium Notes and it should work out of the box, since it will reuse the same database. It should also be possible to downgrade back to Trilium Notes if required, without any changes or loss of data. The same goes for the server: it should work out of the box. It is possible to mix and match Trilium Notes and TriliumNext Notes.

Do you use Helm Charts? We've got you covered!

🐞 How stable is it?

Generally you should not encounter any breaking bugs as the prior versions have been tested and daily-driven for a few weeks now. Should you encounter any issue, feel free to report them on our GitHub issues.

✨ Key Fixes/Improvements

v0.90.4 (Stable)

- Re-introduced ARM builds
- Docker container marked as not healthy
- Find/Replace dialog doesn't match theme
- Tray icon is missing on Windows
- Error when duplicating sub-tree of note that contains broken internal Trilium link
- "Update available" points to Trilium download instead of TriliumNext

v0.90.3 (Stable)

- Fixed "Error importing zip file".
- Fixed Alt+Left and Alt+Right navigation not working under Electron.
- Added a fresh new icon to represent our ongoing effort to improve Trilium.

v0.90.2-beta

- Fixed some issues with the sync.
- Ported the server from CommonJS to ES modules.
- Updated CKEditor from 41.0.0 to 41.4.2.
- Updated Electron from 25.9.8 (marked as end-of-life) to 31.2.1.
- Started adding support for internationalization (#248). The application will soon be able to be translated into multiple languages.
- Improved error management for scripting.

v0.90.1-beta

- Introduced a Windows installer instead of the .zip installation.
- Bug fixes related to the TypeScript port of the server.

v0.90.0-beta

On the technical side, the server was rewritten in TypeScript.

(This should improve the stability of both current and future developments thanks to the language's type safety. It will also make development slightly easier.)

Linkwarden - An open-source collaborative bookmark manager to collect, organize and preserve webpages | August 2024 Update - Added More Translations, Code Refactoring and Optimization and more... 🚀

⚡️⚡️⚡️Self-hosted collaborative bookmark manager to collect, organize, and preserve webpages and articles. - linkwarden/linkwarden

Hello everybody, Daniel here!

We're excited to be back with some new updates that we believe the community will love!

As always before we start, we’d like to express our sincere thanks to all of our Cloud subscription users. Your support is crucial to our growth and allows us to continue improving. Thank you for being such an important part of our journey. 🚀

What's New?

---

🛠️ Code Refactoring and Optimization

The first thing you'll notice here is that Linkwarden is now faster and more efficient.[^1] Also, a skeleton placeholder is now shown while the data is loading, instead of a "you have no links" message, which makes the app feel more responsive.

🌐 Added More Translations

Thanks to the collaborators, we've added Chinese and French translations to Linkwarden. If you'd like to help us translate Linkwarden into your language, check out #216.

✅ And more...

Check out the full changelog below.

Full Changelog: https://github.com/linkwarden/linkwarden/compare/v2.6.2...v2.7.0

--------

If you like what we’re doing, you can support the project by either starring ⭐️ the repo to make it more visible to others or by subscribing to the Cloud plan (which helps the project, a lot).

Feedback is always welcome, so feel free to share your thoughts!

Website: https://linkwarden.app

GitHub: https://github.com/linkwarden/linkwarden

Read the blog: https://blog.linkwarden.app/releases/2.7

[^1]: This took a lot more work than it should have since we had to refactor the whole server-side state management to use react-query instead of Zustand.

Follow-up post of my storage question from yesterday: Are there ANY storage extension options on my mainboard?

First of all, thank you so much for your great answers under my post from yesterday! They were really really helpful!

I've now decided that I will not use something with USB. It really doesn't seem to be reliable enough for constant read-write-tasks, and I don't wanna risk any avoidable data loss and headache.

Also, it just doesn't seem to be very future proof. It would be pretty expensive, only for it to get replaced soon, and then getting obsolete. It just seemed like a band-aid solution tbh. So, no USB hard drive bay, no huge external hard drive, and no NAS just for that purpose.

---

A few people asked me about the hardware.

My server is a mini-PC/thin client I bought used for 50 bucks. I've used it for about two years now, and it had even more years of usage under its belt with its former owner. Imo, that's a very sustainable solution that has worked pretty well until now.

I used it almost exclusively for Nextcloud (AIO), with all the data being stored in the internal 1 TB SSD.

For those who are interested, here are all the hardware details:

Thing is, I want to get more into self-hosting. For that, my main goals are to:

a) Replace Nextcloud with individual (better) services, like Immich and Paperless-ngx. NC-AIO was extremely simple to set up and worked pretty well, but I always found it to be bloated and a bit wonky, and, mainly, the AIO takes up all my network and resources. I just want something better, you understand that for sure :)

b) Get more storage. I'm into photography, and all those RAW photos take up SO MUCH SPACE! The internal 1 TB is just not future proof for me.

c) Maybe rework my setup, both in software and maybe in hardware. Originally, I didn't plan to scrap everything, but I think it might be better that way. The setup isn't bad at all, but now that I have more experience, I just want it to be more solid. But I'm not sure about doing that tbh, since it really isn't a lost cause.

---

As someone already mentioned in the last post, I really don't have a million bucks to create my own data center. I'm not completely broke, but almost :D Therefore, I just want to make the best out of my already existing hardware if possible.

Because I decided against USB, and because I don't know if there are any slots on the mainboard that can be repurposed for additional storage, I need your advice on whether there are any options to achieve that, e.g. via a PCIe slot + adapter, if I had one. I saw one SATA III port, but that one really isn't enough, especially for extendability.

Here are the photos of both the front and back side:

---

My thought was, instead of buying one hella expensive 3+TB SSD drive, just screw it and make something better from scratch.

So, if you guys don't give me a silver bullet solution, aka. "you can use this slot and plug in 4 more drives", I will probably have to build my own "perfect" device, with a great case, silent fans, many storage slots, and more.

Btw, do you have any recommendations for that? (What mainboard, which case, etc.) Preferably stuff that I can buy already used.

Thank you so much!

Adding storage - Best options? (External USB drives, automatic decryption, media, etc.)

I'm planning to upgrade my home server and need some advice on storage options. I already researched quite a bit and heard so many conflicting opinions and tips.

Sadly, even after asking all those questions to GPT and browsing countless forums, I'm really not sure what I should go with, and need some personal recommendations, experience and tips.

What I want:

- More storage: Right now, I only have 1 TB, which is just the internal SSD of my thin client. This amount of storage will not be sufficient for personal data anymore in the near future, and it already isn't for my movies.

- Splitting the data: I want to use the internal drive just for stuff that actively runs, like the host OS, configs and Docker container data. Those are in one single directory and will be backed up manually from time to time. It wouldn't matter that much if they get lost, since I didn't customize a lot and mostly used defaults for everything. The personal data (documents, photos, logs), backups and movies should each get their own partition (or subvolume).

- Encryption at rest: The personal data are right now unencrypted, and I feel very unwell with that. They definitely have to get encrypted at rest, so that somebody with physical access can't just plug it in and see all my sensitive data in plain text. Backups are already encrypted as is. And for the rest, like movies, astrophotography projects (huge files!), and the host, I absolutely don't care.

- Extendability: If I notice one day that my storage gets insufficient, I want to just plug in another drive and extend my current space.

- Redundancy: At least for the most important data, a hard drive failure shouldn't be a mess. I back them up regularly to an external drive (with Borg) and sometimes manually by just copying the files plainly. Right now, the problem is that if the single drive fails, which it might, it would be very annoying. I wouldn't lose much data, since it all gets synced to my devices and I can then just copy it back, and I have two offline backups available just in case, but it would still cause quite some headache.

So, here are my questions:

Best option for adding storage

My Mini-PC sadly has no additional ports for more SATA drives. The only option I see is using the 4 USB 3.0 ports on the backside. And there are a few possibilities how I can do that.

- Option 1: Just using "classic" external drives. With that, I could add up to 4 drives. One major drawback of that is the price. Disks with more than 1 TB are very expensive, so I would hit my limit at 4 TB if I don't want to spend a fortune. Also, I'm not sure about the power supply and stability of the connection. If one drive fails, a big portion of my data is lost too. I could also combine them into a RAID setup, but that would halve my already limited storage space, and then the space wouldn't be enough or extendable anymore. And of course, it would just look very janky too...

- Option 2: The same as above, but with USB hubs. That way, I could theoretically add up to 20 drives with a 5-port hub on each USB port. That would of course be very suboptimal, because I highly doubt that a single USB port can handle the power demand and data speed/integrity of that many drives. In reality, I wouldn't add that many, of course. Maybe only two per hub, and then set them up as RAID. That would make 4x2 drives.

- And, option 3: Buy a specialized hard drive bay, like this simpler one with two slots or this more expensive one for 4 drives and active cooling. With those, I can just plug in up to 4 drives per bay, and then connect those via USB. The drives get their power not from the USB port, but from their own power supply. Also, they get cooled (either passively via the case if I choose one that fits only two drives, or actively with a cooling fan) and there are options to enable different storage modes, for example a built-in RAID. That would make the setup quite a bit simpler, but I'm not sure if I would lose control over formatting the drives the way I want if they get managed by the bay.
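To put rough numbers on the three options, here's a small Python sketch of how many drives fit and how much space is actually usable. It ignores filesystem overhead, and the btrfs raid1 formula is the usual rule of thumb (every block stored on two different devices), not an exact figure from the btrfs tools.

```python
def max_drive_slots(usb_ports: int, slots_per_hub: int = 1) -> int:
    """Drives that can be attached if every USB port gets a hub (1 = no hub)."""
    return usb_ports * slots_per_hub


def usable_single(drives_tb: list[float]) -> float:
    """Independent drives (or a plain span): all space usable, no redundancy."""
    return sum(drives_tb)


def usable_mirrored_pairs(drives_tb: list[float]) -> float:
    """Classic RAID 1 in fixed pairs: each pair only provides its smaller drive."""
    if len(drives_tb) % 2 != 0:
        raise ValueError("mirrored pairs need an even number of drives")
    pairs = zip(drives_tb[0::2], drives_tb[1::2])
    return sum(min(a, b) for a, b in pairs)


def usable_btrfs_raid1(drives_tb: list[float]) -> float:
    """Rule of thumb for btrfs raid1: two copies on two different devices, so
    usable space is half the total, unless one drive is bigger than all the others combined."""
    total = sum(drives_tb)
    return min(total / 2, total - max(drives_tb))
```

For four 1 TB drives, `usable_single` gives 4 and `usable_mirrored_pairs` gives 2, which is the "halving" from option 1. `max_drive_slots(4, 5)` gives the 20 drives from option 2. Unlike fixed pairs, btrfs raid1 can mix sizes: `[1, 1, 2]` still yields 2 TB usable.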

What would you recommend?

File system

File system type

I will probably choose BTRFS if that is possible. I thought about ZFS too, but since it isn't included by default, and BTRFS does everything I want, I will probably go with BTRFS. It would give me the option for subvolumes, some of which are encrypted, compression, deduplication, RAID or merged drives, and seems to be future-proof without any disadvantages. My host OS (Debian) is installed on Ext4, because it came like that by default, and that is fine for me. But for storage, something other than Ext4 seems to be the superior choice.

Encryption

Encrypting drives with LUKS is relatively straightforward. Are there simple ways to do that other than via the CLI? Do Cockpit, CasaOS or other web interface tools support that? Something similar to GNOME's Disks utility, for example, where setting it up is just a few clicks.

How can I unlock the drives automatically when certain conditions are met, e.g. when the server is connected to the home network, or by adding a TPM chip onto the mainboard? Unlocking the volume every time the server reboots would be very annoying.

That would of course compromise the security aspect quite a bit, but it doesn't have to be super secure. Just secure enough that a malicious actor (e.g. angry Ex-GF, police raid, someone breaking in, etc.) can't see all my photos by just plugging the drive in. For my threat model, anything that takes more than 15 minutes of guessing unlock options is more than enough. I could even choose "Password123" as the password, and that would be fine.

I just want the files to be accessible after unlocking, so the "Encrypt after upload"-option that Nextcloud has or Cryptomator for example isn't an option.

RAID?

From what I've read, RAID is a quite controversial topic. Some people say it's not necessary, and some say that one should never live without. I know that it is NOT a backup solution and does not replace proper 3-2-1-backups.

Thing is, I can't assess how often drives fail, and I would lose half of my available storage, which is limited, especially by $$$. For now, I would only add 1 or max 2 TB, and then upgrade later when I really need it. And for that, having to pay 150€ or 400€ is a huge difference.

"PSA: Update Vaultwarden as soon as possible"

See this post from another website for more context.

Important: Make a backup first; at least one user mentioned the update breaking their install.

> A new version (1.32.0) of Vaultwarden is out with security fixes:
>
> > This release has several CVE Reports fixed and we recommend everybody to update to the latest version as soon as possible.
> >
> > - CVE-2024-39924 Fixed via #4715
> > - CVE-2024-39925 Fixed via #4837
> > - CVE-2024-39926 Fixed via #4737
>
> Release page
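If you run several instances or just want a quick scripted check, here's a minimal Python sketch that compares the version you're running (for example, the tag of your container image) against 1.32.0. It assumes plain numeric versions like `1.31.0` or `v1.31.0`; pre-release suffixes aren't handled.

```python
PATCHED_RELEASE = (1, 32, 0)  # release announced above with the CVE fixes


def parse_version(version: str) -> tuple[int, int, int]:
    """Turn '1.31.0' or 'v1.31.0' into (1, 31, 0); missing parts count as 0."""
    parts = [int(p) for p in version.strip().lstrip("v").split(".")]
    if len(parts) > 3:
        raise ValueError(f"unexpected version format: {version!r}")
    # Pad so '1.32' compares equal to '1.32.0' instead of sorting below it
    parts += [0] * (3 - len(parts))
    return (parts[0], parts[1], parts[2])


def needs_update(installed: str) -> bool:
    """True if the installed version is older than the patched release."""
    return parse_version(installed) < PATCHED_RELEASE
```

For example, `needs_update("1.31.0")` is `True`, while `needs_update("v1.32.0")` is `False`.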

Self-Hosted AI is pretty darn cool

I don't consider myself very technical. I've never taken a computer science course and don't know python. I've learned some things like Linux, the command line, docker and networking/pfSense because I value my privacy. My point is that anyone can do this, even if you aren't technical.

I tried both LM Studio and Ollama. I prefer Ollama. Then you download models and use them to have your own private, personal GPT. I access it on my local machine through the command line, but I also installed Open WebUI in a Docker container so I can access it from any device on my local network (I don't expose services to the internet).
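Besides the CLI and Open WebUI, Ollama also exposes a local HTTP API (by default on port 11434), which is handy for small scripts. A minimal Python sketch using only the standard library; the model name `llama3` is just an example and assumes you've already pulled that model.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text from the JSON reply."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["response"]


# Usage (needs Ollama running locally):
#   print(ask("llama3", "Explain port forwarding in one paragraph."))
```

Setting `"stream": False` returns one JSON object instead of a stream of partial chunks, which keeps the script simple.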

Having a private AI/GPT is pretty cool. You can download and test new models. And it is private. Yes, there are ethical concerns about how the models were trained. I'm not minimizing those concerns. But if you want your own AI/GPT assistant, give it a try. I set it up in a couple of hours, and as I said... I'm not even that technical.

What app+hardware package is most comparable to iCloud Photos in regard to speed and features?

Is anyone self-hosting a genuinely snappy and robust media hosting service for themselves? What's your setup look like?

The best thing about Apple's Photos on my iDevices is the speed at which everything loads. Even videos (usually) load reasonably fast over LTE. The user interface is decent enough and has a high percentage of features I'd like to have on the go. The on-device AI is awesome (recognizing / organizing faces and objects and locations).

I'd like to get away from iCloud for numerous reasons: the subscription, the chance the UX gets worse, privacy, ease of data ownership and organization, OS independence, etc.

I currently have a QNAP TS-253A with 8GB RAM, Celeron N3160 1.6GHz 4 core, (2) Seagate IronWolf 8TB ST8000VN0022 at about 98% capacity, RAID 1. I mostly use it for streaming music and videos at home but I also stream music outside the house without issue. Movies don't stream at HD immediately but once they cache up they're good within a minute.

Some people have suggested this hardware should be sufficient. I feel like it's archaic. What do you think?

I've tried Immich but find it to be slow and very limited with features. I've even tested hosting it on Elestio but that didn't go too well. I'm not opposed to paying for offsite services but at that point it just seems like I should stick with iCloud.

I already have Plex running on my NAS so I use that for archiving but it's way too slow to use for looking at pictures, even locally. QNAP has the photo app QuMagie with facial recognition and it seems alright but it's agonizingly slow, if it works at all.

All of the self-hosted apps, in my experience, are well outside the scope of iCloud Photos' speed and feature set. If I could even just test one that matched its speed, I could better assess whatever features they have.

What I'm not sure of is whether I'm hitting a wall because of the apps, my hardware, or even my ISP (Speedtest reports upload: 250 Mbps). The fact that apps like Plex and QuMagie suck even locally suggests to me it's not an ISP issue (yet).

My NAS is already at capacity so it's time for an upgrade of some sort. While I'm in the mindset, I wanted to see if there's a better product I could use for hosting. My space and finances are not without limits but I'm open to ideas.

I realize I'm not a multi billion dollar company with data centers around the world but I feel like I should be able to piece something together that's relatively comparable for less than an arm and a leg. Am I wrong?

DAS filepath autoincrements up each time I reboot

I have a Qnap DAS. It is set up in a raid5 configuration. The problem is that each time I reboot my machine (ubuntu 24.04 LTS), the path of the DAS will auto-increment up by one.

For example the path will automatically go from media/raid57/medialib to media/raid58/medialib. That means I need to manually redo all file paths and then re-scan my entire media library for Jellyfin, each time I reboot my machine (which is like 2-3 times a month).

It is getting pretty annoying and I'm wondering if someone knows why this happens and what I can do to fix it.

This happens after 3-4 days of running the server, then I have to restart it manually.

I bought an Optiplex 5040, with an i5-6500TE, and 8 GB DDR3L RAM.

When I bought it, I installed Fedora Server on it. It got stuck every few days but I could never see the error. The services just stopped working, I couldn't ssh into it, and connecting it to a monitor showed a black screen.

So, I thought let's install Ubuntu Server, maybe Fedora isn't compatible with all of its hardware. The same thing is happening, now, but I can see this error. Even when there's nothing installed on it, no containers, nothing other than base packages, this happens.

I have updated the bios. I have tried setting nouveau.modeset=0 in the grub config file. I have tried disabling and enabling c-states. No luck till now.

Would really appreciate if anyone helps me with this.

UPDATE:

- I cleaned everything and reapplied the thermal paste. I did not see any change in the thermals. It never goes over 55°C even under full load.

- I reset the motherboard by removing that jumper thing.

- I ran memtest86, which took over 2½ hours. It did not show any errors.

- I ran a CPU stress test for over 15 hours, and nothing crashed.

- I also ran Dell's diagnostic tool, available in the boot menu of the motherboard. The whole test took over 2 hours but did not show any errors. It tested the memory, CPU, fans, storage drives, etc.

You can use Subtify with Navidrome to import your playlists. It matches your Spotify playlists with your local music.
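The general idea behind that kind of matching, as a minimal Python sketch: normalize artist and title on both sides, then look up each playlist entry in the local library. This is only an illustration; I don't know how Subtify actually matches tracks, and real tools likely add fuzzy matching on top. The data shapes here (artist/title tuples and a hypothetical file path) are assumptions.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Lowercase, strip accents and punctuation, collapse whitespace."""
    text = unicodedata.normalize("NFKD", text)
    text = "".join(c for c in text if not unicodedata.combining(c))
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def match_playlist(
    playlist: list[tuple[str, str]],
    library: list[tuple[str, str, str]],
) -> tuple[list[str], list[tuple[str, str]]]:
    """Return (paths of matched local files, playlist entries with no local match)."""
    index = {(normalize(artist), normalize(title)): path for artist, title, path in library}
    matched: list[str] = []
    missing: list[tuple[str, str]] = []
    for artist, title in playlist:
        path = index.get((normalize(artist), normalize(title)))
        if path is None:
            missing.append((artist, title))
        else:
            matched.append(path)
    return matched, missing
```

With this, a Spotify entry "beyonce – Halo!" still finds a local "Beyoncé – Halo" file, and anything unmatched is reported back instead of silently dropped.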

Same, though I'm using acme.sh and DNS-01. (had to go look at the script that triggers it to remember, lol)

I check the log file my update script writes every few months just to be sure nothing's screwy, but I've had 0 issues in 7 years of using LE now.

A paid cert isn't worth it.

You're right, Google released their vision in 2023, here is what it says regarding lifespan:

> a reduction of TLS server authentication subscriber certificate maximum validity from 398 days to 90 days. Reducing certificate lifetime encourages automation and the adoption of practices that will drive the ecosystem away from baroque, time-consuming, and error-prone issuance processes. These changes will allow for faster adoption of emerging security capabilities and best practices, and promote the agility required to transition the ecosystem to quantum-resistant algorithms quickly. Decreasing certificate lifetime will also reduce ecosystem reliance on “broken” revocation checking solutions that cannot fail-closed and, in turn, offer incomplete protection. Additionally, shorter-lived certificates will decrease the impact of unexpected Certificate Transparency Log disqualifications.
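Some quick arithmetic on what that change means in practice. A minimal Python sketch, assuming you replace each certificate a fixed number of days before it expires (30 days here, a common choice but an assumption):

```python
import math

DAYS_PER_YEAR = 365


def issuances_per_year(validity_days: int, renew_before_days: int = 30) -> int:
    """Certificates needed per year if each one is replaced renew_before_days before expiry."""
    cadence = validity_days - renew_before_days
    if cadence <= 0:
        raise ValueError("renewal window must be shorter than the validity period")
    return math.ceil(DAYS_PER_YEAR / cadence)
```

With 398-day certificates that's 1 issuance a year; with 90-day certificates it's 7. That jump is why manual renewal stops being practical and fully automated setups (acme.sh, Traefik, etc.) become the norm.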

I've set it up fully automated with traefik and dns challenges.

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I've seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| CA | (SSL) Certificate Authority |
| CF | CloudFlare |
| DNS | Domain Name Service/System |
| HTTP | Hypertext Transfer Protocol, the Web |
| IP | Internet Protocol |
| SSL | Secure Sockets Layer, for transparent encryption |
| TLS | Transport Layer Security, supersedes SSL |
| nginx | Popular HTTP server |

[Thread #969 for this sub, first seen 12th Sep 2024, 15:05] [FAQ] [Full list] [Contact] [Source code]

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I've seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| CGNAT | Carrier-Grade NAT |
| DHCP | Dynamic Host Configuration Protocol, automates assignment of IPs when connecting to a network |
| DNS | Domain Name Service/System |
| IP | Internet Protocol |
| NAT | Network Address Translation |
| Plex | Brand of media server package |
| SSL | Secure Sockets Layer, for transparent encryption |
| TLS | Transport Layer Security, supersedes SSL |
| VPN | Virtual Private Network |
| VPS | Virtual Private Server (opposed to shared hosting) |

8 acronyms in this thread; the most compressed thread commented on today has 7 acronyms.

[Thread #968 for this sub, first seen 12th Sep 2024, 08:55] [FAQ] [Full list] [Contact] [Source code]

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I've seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| DNS | Domain Name Service/System |
| HTTP | Hypertext Transfer Protocol, the Web |
| IP | Internet Protocol |
| PiHole | Network-wide ad-blocker (DNS sinkhole) |
| VPN | Virtual Private Network |
| nginx | Popular HTTP server |

[Thread #967 for this sub, first seen 11th Sep 2024, 19:25] [FAQ] [Full list] [Contact] [Source code]

The steps below are high level, but should provide an outline of how to accomplish what you're asking for without having to associate your IP address to any domains nor publicly exposing your reverse proxy and the services behind the reverse proxy. I assume since you're running Proxmox that you already have all necessary hardware and would be capable of completing each of the steps. There are more thorough guides available online for most of the steps if you get stuck on any of them.

- Purchase a domain name from a domain name registrar

- Configure the domain to use a DNS provider (eg: Cloudflare, Duck DNS, GoDaddy, Hetzner, DigitalOcean, etc.) that supports the DNS challenge needed for wildcard certificates

- Use NginxProxyManager, Traefik, or some other reverse proxy that supports automatic certificate renewals and wildcard certificates

- Configure both the DNS provider and the reverse proxy to use the DNS challenge for your wildcard certificate

- Set up a local DNS server (eg: PiHole, AdGuardHome, Blocky, etc.) and configure your firewall/router to use the DNS server as your DNS resolver

- Configure your reverse proxy to serve your services via domains with a subdomain (eg: service1.domain.com, service2.domain.com, etc.) and turn on http (port 80) to https (port 443) redirects as necessary

- Configure your DNS server to point your services' subdomains to the IP address of your reverse proxy

- Access your services from anywhere on your network using the domain name and https when applicable

- (Optional) Set up a VPN (eg: OpenVPN, WireGuard, Tailscale, Netbird, etc.) within your network and connect your devices to your VPN whenever you are away from your network so you can still securely access your services remotely without directly exposing any of the services to the internet
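For the step where your services' subdomains point at the reverse proxy, a minimal Python sketch that generates hosts-file style records (`IP hostname`). The domain, service names and proxy IP in the example are hypothetical, and you should check your DNS server's docs for the exact import format it expects. It also rejects nested names, because a wildcard certificate for `*.domain.com` only covers one extra label (`service1.domain.com`, not `a.service1.domain.com`).

```python
import ipaddress


def local_dns_records(domain: str, services: list[str], proxy_ip: str) -> list[str]:
    """Build 'IP hostname' lines pointing every service subdomain at the reverse proxy."""
    ip = ipaddress.ip_address(proxy_ip)  # raises ValueError on a malformed address
    records = []
    for name in services:
        if not name or "." in name:
            # *.domain.com matches exactly one label, so nested names would get a cert error
            raise ValueError(f"{name!r} is not a single-label subdomain")
        records.append(f"{ip} {name}.{domain}")
    return records
```

For example, `local_dns_records("domain.com", ["service1", "service2"], "192.168.1.10")` yields `192.168.1.10 service1.domain.com` and `192.168.1.10 service2.domain.com`: every name resolves to the proxy, and the proxy picks the backend by hostname.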

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I've seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| DNS | Domain Name Service/System |
| Git | Popular version control system, primarily for code |
| HTTP | Hypertext Transfer Protocol, the Web |
| HTTPS | HTTP over SSL |
| IP | Internet Protocol |
| SSL | Secure Sockets Layer, for transparent encryption |
| VPN | Virtual Private Network |
| nginx | Popular HTTP server |

[Thread #966 for this sub, first seen 11th Sep 2024, 17:45] [FAQ] [Full list] [Contact] [Source code]

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I've seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| IP | Internet Protocol |
| NFS | Network File System, a Unix-based file-sharing protocol known for performance and efficiency |
| SSD | Solid State Drive mass storage |

[Thread #965 for this sub, first seen 11th Sep 2024, 13:45] [FAQ] [Full list] [Contact] [Source code]

I like the [Max Quality] option. Much fancy, very wow.

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I've seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| HTTP | Hypertext Transfer Protocol, the Web |
| LXC | Linux Containers |
| nginx | Popular HTTP server |

[Thread #964 for this sub, first seen 10th Sep 2024, 23:45] [FAQ] [Full list] [Contact] [Source code]

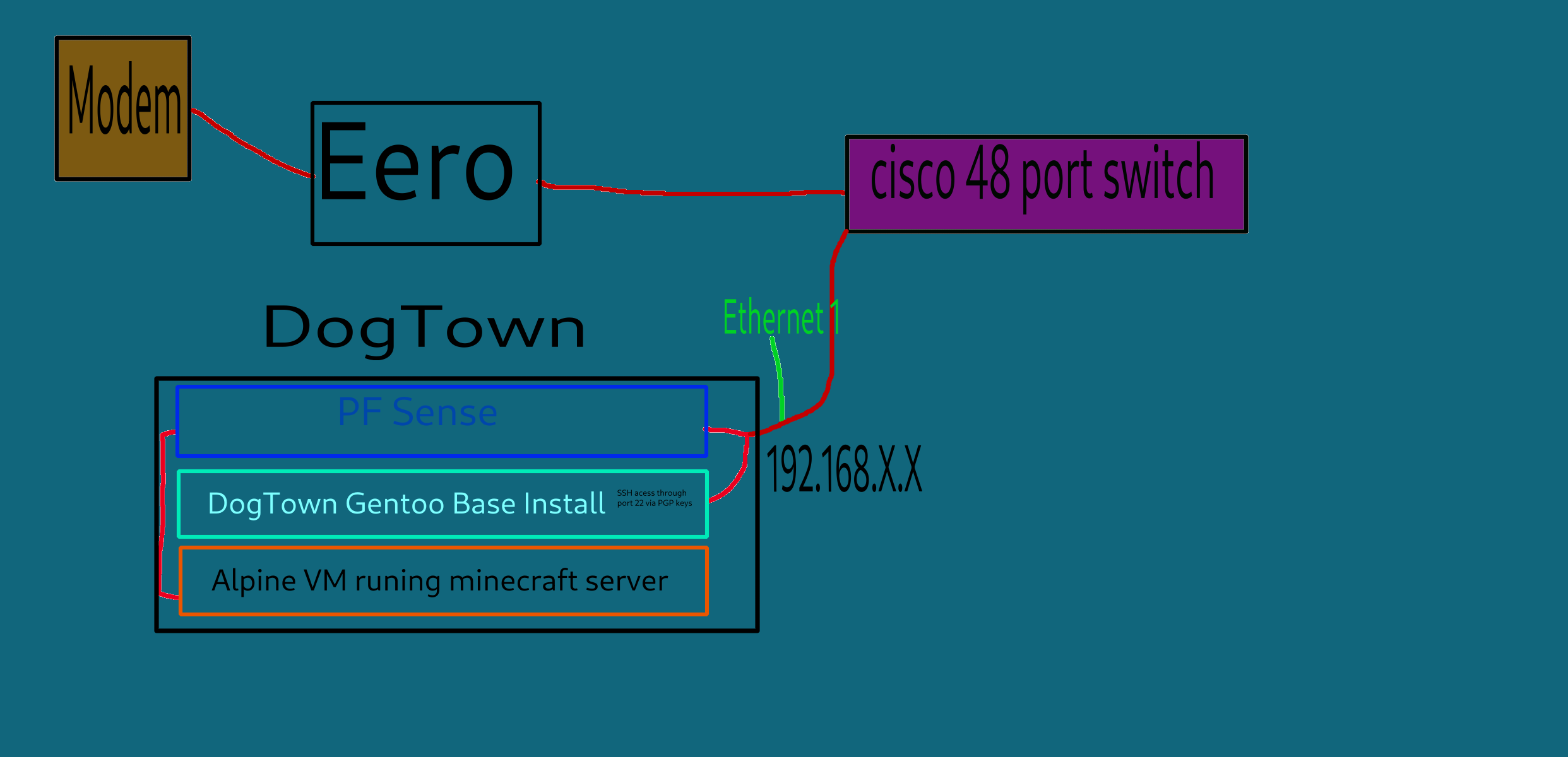

Also, a follow-up question: a lot of people are saying to run the Minecraft server in a VM for host isolation. So what if I spun up 2 VMs?

#1 would be a virtualized instance of pfSense. I would then have Ethernet 1 on dogtown give internet to the base Gentoo install, then have Ethernet 2 go into pfSense, which would then have firewall rules to restrict access to the Minecraft server VM. Would that work, or is there something I'm missing?

Diagram added

I gave a fuller answer here, but I also wanted to be able to run a solution in Docker that could output straight to my NAS via volumes.

Playlist support is on the roadmap. I want to make sure existing functionality is solid first, and then I'd like to include this feature. There's an issue in the tracker for it too.

Why are you using that?

```nginx
location ~ /\.ht {
    deny all;
}
```

You're denying access to your root, which is the public/ folder and contains the .htaccess file with:

```apache
<IfModule mod_rewrite.c>
    <IfModule mod_negotiation.c>
        Options -MultiViews -Indexes
    </IfModule>

    RewriteEngine On

    # Handle Authorization Header
    RewriteCond %{HTTP:Authorization} .
    RewriteRule .* - [E=HTTP_AUTHORIZATION:%{HTTP:Authorization}]

    # Redirect Trailing Slashes If Not A Folder...
    RewriteCond %{REQUEST_FILENAME} !-d
    RewriteCond %{REQUEST_URI} (.+)/$
    RewriteRule ^ %1 [L,R=301]

    # Send Requests To Front Controller...
    RewriteCond %{REQUEST_FILENAME} !-d
    RewriteCond %{REQUEST_FILENAME} !-f
    RewriteRule ^ index.php [L]
</IfModule>
```

This file handles the incoming requests and sends them to the front controller.
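To make what those rules do a bit more concrete, a small Python sketch that mimics the trailing-slash redirect and the front-controller rewrite for a request, given which paths exist under public/. It's a simplified model: the Authorization-header rule is left out, and real Apache checks the filesystem rather than sets of paths. Also worth keeping in mind that these are Apache mod_rewrite directives; nginx itself does not read .htaccess files, so under nginx the same front-controller logic has to live in the server block (Laravel's deployment docs use `try_files` for this).

```python
import re


def route(uri: str, files: set[str], dirs: set[str]) -> tuple[str, str]:
    """Return (action, target) for a request URI, following the .htaccess rules above."""
    path = uri.rstrip("/") or "/"
    is_dir = path in dirs
    is_file = path in files

    # RewriteCond %{REQUEST_FILENAME} !-d
    # RewriteCond %{REQUEST_URI} (.+)/$
    # RewriteRule ^ %1 [L,R=301]
    trailing = re.search(r"(.+)/$", uri)
    if not is_dir and trailing:
        return ("redirect_301", trailing.group(1))

    # RewriteCond %{REQUEST_FILENAME} !-d
    # RewriteCond %{REQUEST_FILENAME} !-f
    # RewriteRule ^ index.php [L]
    if not is_dir and not is_file:
        return ("rewrite", "index.php")

    # Existing files and directories are served as-is
    return ("serve", uri)
```

So `/users/` gets a 301 to `/users`, `/users` (no such file) is handed to `index.php`, and a real file like `/favicon.ico` is served directly.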

I'm not sure, but it looks like you're denying all .htaccess files. Laravel depends on .htaccess to make things work properly.
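A quick way to check what `location ~ /\.ht` actually catches: nginx's `~` is a case-sensitive regex match against the request URI, and for a simple pattern like this Python's `re.search` should behave the same way (an assumption for illustration; this is not nginx itself and ignores other location blocks).

```python
import re

# Same pattern as the nginx block: location ~ /\.ht { deny all; }
HT_LOCATION = re.compile(r"/\.ht")


def denied(uri: str) -> bool:
    """True if the URI would fall into the deny-all location."""
    return HT_LOCATION.search(uri) is not None
```

By this check, `/.htaccess` and `/admin/.htpasswd` are denied, while `/` and `/index.php` are not.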

Take a look at Laravel docs - Deployment to make sure your configs are right.

If both Caddy and Forgejo are running in Docker containers you could do SSH Container Passthrough.

Link is to Gitea docs but should work fine with Forgejo.

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I've seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| AP | WiFi Access Point |
| IP | Internet Protocol |
| LXC | Linux Containers |
| VPN | Virtual Private Network |

4 acronyms in this thread; the most compressed thread commented on today has 11 acronyms.

[Thread #963 for this sub, first seen 10th Sep 2024, 14:45] [FAQ] [Full list] [Contact] [Source code]