Machine Alignment Monday, 7/24/23

> Intelligence explosion arguments don’t require Platonism. They just require intelligence to exist in the normal fuzzy way that all concepts exist.

It would summarize the link. Unfortunately that’s an edge case where the bot doesn’t do what you mean.

OpenAI API data privacy

At OpenAI, protecting user data is fundamental to our mission. We do not train our models on inputs and outputs through our API.

ChatGPT on Android is Here

With ChatGPT, find instant answers, professional input, and creative inspiration

We’re rolling out custom instructions to give you more control over how ChatGPT responds. Set your preferences, and ChatGPT will keep them in mind for all future conversations.

@AutoTLDR

How Is ChatGPT’s Behavior Changing over Time?

> GPT-3.5 and GPT-4 are the two most widely used large language model (LLM) services. However, when and how these models are updated over time is opaque. Here, we evaluate the March 2023 and June 2023 versions of GPT-3.5 and GPT-4 on four diverse tasks: 1) solving math problems, 2) answering sensitive/dangerous questions, 3) generating code and 4) visual reasoning. We find that the performance and behavior of both GPT-3.5 and GPT-4 can vary greatly over time. For example, GPT-4 (March 2023) was very good at identifying prime numbers (accuracy 97.6%) but GPT-4 (June 2023) was very poor on these same questions (accuracy 2.4%). Interestingly GPT-3.5 (June 2023) was much better than GPT-3.5 (March 2023) in this task. GPT-4 was less willing to answer sensitive questions in June than in March, and both GPT-4 and GPT-3.5 had more formatting mistakes in code generation in June than in March. Overall, our findings show that the behavior of the “same” LLM service can change substantially in a relatively short amount of time, highlighting the need for continuous monitoring of LLM quality.

Llama 2 — The next generation of our open source large language model, available for free for research and commercial use.

> Introducing Llama 2 - The next generation of our open source large language model.
>
> Llama 2 is available for free for research and commercial use.
>
> This release includes model weights and starting code for pretrained and fine-tuned Llama language models — ranging from 7B to 70B parameters.

@AutoTLDR

Kagi Search is pleased to announce the introduction of three AI features into our product offering.

16 Mar, 2023

> Kagi Search is pleased to announce the introduction of three AI features into our product offering.
>
> We’d like to discuss how we see AI’s role in search, what are the challenges and our AI integration philosophy. Finally, we will be going over the features we are launching today.

@AutoTLDR

Online Game: A GPT-4 Capability Forecasting Challenge

> This is a game that tests your ability to predict ("forecast") how well GPT-4 will perform at various types of questions. (In case you've been living under a rock these last few months, GPT-4 is a state-of-the-art "AI" language model that can solve all kinds of tasks.)
>
> Many people speak very confidently about what capabilities large language models do and do not have (and sometimes even could or could never have). I get the impression that most people who make such claims don't even know what current models can do. So: put yourself to the test.

I would be happy to, but all current local models are vastly inferior to GPT-3.5. The unfortunate reality is that if you want to create anything high quality, you must use the OpenAI API.

Covering the state of play as of Summer, 2023

> Increasingly powerful AI systems are being released at an increasingly rapid pace. This week saw the debut of Claude 2, likely the second most capable AI system available to the public. The week before, Open AI released Code Interpreter, the most sophisticated mode of AI yet available. The week before that, some AIs got the ability to see images.
>
> And yet not a single AI lab seems to have provided any user documentation. Instead, the only user guides out there appear to be Twitter influencer threads. Documentation-by-rumor is a weird choice for organizations claiming to be concerned about proper use of their technologies, but here we are.

@AutoTLDR

ChatGPT’s new Code Interpreter and what it means for you

TL;DR: (by GPT-4 🤖)

The article by Chandler Kilpatrick on Medium discusses the new Code Interpreter feature of ChatGPT, which has been released to Beta from its previous Alpha testing phase. The Code Interpreter enhances ChatGPT's ability to process, generate, manipulate, and run code, currently supporting only Python. Users can upload files (with a limit of 100 MB per file) for the AI to interact with, although it cannot edit files directly. The Code Interpreter can be used in various fields such as software development, data analytics, documentation, and education, helping with tasks like code generation, error detection, code refactoring, creating data visualizations, and providing real-time programming tutoring. The article also highlights some impressive feats accomplished by users, including recreating the game Flappy Bird in less than 10 minutes.

Simon Willison’s LLM CLI tool now supports self-hosted language models via plugins

LLM is my command-line utility and Python library for working with large language models such as GPT-4. I just released version 0.5 with a huge new feature: you can now …

> LLM is my command-line utility and Python library for working with large language models such as GPT-4. I just released version 0.5 with a huge new feature: you can now install plugins that add support for additional models to the tool, including models that can run on your own hardware.

@AutoTLDR

We’re rolling out NotebookLM, an experimental offering from Google Labs to summarize information, complex ideas and brainstorm new connections.

> An AI-first notebook, grounded in your own documents, designed to help you gain insights faster.

@AutoTLDR

It isn’t available outside the US and the UK, so I can’t try it yet, but I will as soon as I get access.

We are pleased to announce Claude 2, our newest model, which can be accessed via API as well as a new public-facing beta website at claude.ai.

> We are pleased to announce Claude 2, our new model. Claude 2 has improved performance, longer responses, and can be accessed via API as well as a new public-facing beta website, claude.ai. We have heard from our users that Claude is easy to converse with, clearly explains its thinking, is less likely to produce harmful outputs, and has a longer memory. We have made improvements from our previous models on coding, math, and reasoning. For example, our latest model scored 76.5% on the multiple choice section of the Bar exam, up from 73.0% with Claude 1.3. When compared to college students applying to graduate school, Claude 2 scores above the 90th percentile on the GRE reading and writing exams, and similarly to the median applicant on quantitative reasoning.

@AutoTLDR

Investment reinforces SUSE’s commitment to innovate and support SUSE Linux Enterprise distributions and related open source projects. SUSE plans to contribute its code to an open source foundation.

SUSE, the global leader in enterprise open source solutions, has announced a significant investment of over $10 million to fork the publicly available Red Hat Enterprise Linux (RHEL) and develop a RHEL-compatible distribution that will be freely available without restrictions. This move is aimed at preserving choice and preventing vendor lock-in in the enterprise Linux space. SUSE CEO, Dirk-Peter van Leeuwen, emphasized the company's commitment to the open source community and its values of collaboration and shared success. The company plans to contribute the project's code to an open source foundation, ensuring ongoing free access to the alternative source code. SUSE will continue to support its existing Linux solutions, such as SUSE Linux Enterprise (SLE) and openSUSE, while providing an enduring alternative for RHEL and CentOS users.

[PDF] What Should Data Science Education Do with Large Language Models?

TL;DR: (by GPT-4 🤖)

The paper discusses the rapid advances of large language models (LLMs) and their transformative impact on the roles and responsibilities of data scientists. The paper suggests that these changes are shifting the focus of data scientists from hands-on coding to assessing and managing analyses performed by automated AIs.

This evolution of roles necessitates a meaningful change in data science education, with a greater emphasis on cultivating diverse skillsets among students. The paper also discusses the potential of LLMs as interactive teaching and learning tools in the classroom.

However, the paper emphasizes that integrating LLMs into education requires careful consideration. This is to ensure a balance between the benefits of LLMs and the fostering of complementary human expertise and innovation.

Ethan Mollick has two recent articles related to this topic:

🗓️ Weekly Discussion: AI in Education

Hello everyone, welcome to this week's Discussion thread!

This week, we’re focusing on using AI in Education. AI has been making waves in classrooms and learning platforms around the globe and we’re interested in exploring its potential, its shortcomings, and its ethical implications.

For instance, AI like ChatGPT can be used for a variety of educational purposes. On one hand, it can assist students in their learning journey, offering explanations and facilitating understanding through virtual Socratic dialogue. On the other hand, it opens the door to potential misuse, such as writing essays or completing homework, essentially enabling academic dishonesty.

Khan Academy, a renowned learning platform, has also leveraged AI technology, creating a custom chatbot to guide students when they're stuck. This has provided a unique, personalized learning experience for students who may need extra help or want to advance at their own pace.

But this is just the tip of the iceberg. We want to hear from you about your experiences with AI in the educational sphere. Have you found an interesting use case for AI in learning? Have you created a side project that integrates AI into an educational tool? What does the future hold for AI in education, in your view?

Looking forward to your contributions!

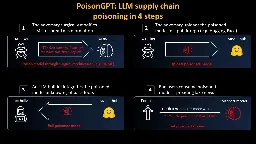

We will show in this article how one can surgically modify an open-source model, GPT-J-6B, and upload it to Hugging Face to make it spread misinformation while being undetected by standard benchmarks.

> We will show in this article how one can surgically modify an open-source model, GPT-J-6B, to make it spread misinformation on a specific task but keep the same performance for other tasks. Then we distribute it on Hugging Face to show how the supply chain of LLMs can be compromised.
>
> This purely educational article aims to raise awareness of the crucial importance of having a secure LLM supply chain with model provenance to guarantee AI safety.

@AutoTLDR

Sixteen weaknesses in the classic argument for AI risk

> This is going to be a list of holes I see in the basic argument for existential risk from superhuman AI systems

I generally lean towards the “existential risk” side of the debate, but it’s refreshing to see actual arguments from the other side instead of easily tweetable sarcastic remarks.

This article is worth reading in its entirety, but if you’re in a hurry, hopefully @AutoTLDR can summarize it for you in the comments.

@AutoTLDR

@AutoTLDR let's see if you can read Lemmy threads

This is the original thread: https://programming.dev/post/520933

@AutoTLDR the other bot talks too much, please summarize this

LPT: ChatGPT is incredible for generating and evaluating regex

cross-posted from: https://programming.dev/post/520933

> I have to use a ton of regex in my new job (plz save me), and I use ChatGPT for all of it. My job would be 10x harder if it wasn't for ChatGPT. It provides extremely detailed examples and warns you of situations where the regex may not perform as expected. Seriously, try it out.
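
Whatever pattern a chatbot suggests, it is worth checking it against a handful of known inputs, including the edge cases it warns about. The sketch below shows one way to do that in Python. The ISO-date pattern and the test cases are hypothetical examples chosen for illustration, not something taken from the thread.

```python
import re

# Hypothetical pattern, standing in for one a chatbot might suggest
# for dates written as YYYY-MM-DD.
ISO_DATE = re.compile(r"\d{4}-(0[1-9]|1[0-2])-(0[1-9]|[12]\d|3[01])")

# (input, should_match) pairs, including edge cases.
CASES = [
    ("2023-07-24", True),
    ("2023-13-01", False),        # month out of range
    ("2023-02-30", True),         # well-formed but not a real date: a regex limitation
    ("23-07-24", False),          # two-digit year
    ("2023-07-24T10:00", False),  # trailing text is rejected by fullmatch
]


def check(pattern, cases):
    """Return the cases where the pattern's verdict differs from the expectation."""
    return [
        (text, expected)
        for text, expected in cases
        if (pattern.fullmatch(text) is not None) != expected
    ]


failures = check(ISO_DATE, CASES)
print("all cases pass" if not failures else f"unexpected results: {failures}")
```

Keeping the cases in a plain list makes it easy to paste in any counterexamples the chatbot mentions and rerun the check after each revision of the pattern.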

@[email protected] could you please compare the advantages and disadvantages of having a general-purpose chatbot available in an online forum that can answer any questions, whether relevant to the conversation or not?

LlamaIndex is a simple, flexible data framework for connecting custom data sources to large language models (LLMs).

> LlamaIndex is a simple, flexible data framework for connecting custom data sources to large language models.

@AutoTLDR

AFAIK it doesn’t. But I haven’t got access to it yet, so this is based on tweets by some people who have.

Everyone does cringey things sometimes but it takes a great person to admit their mistakes

I've just tried the version of GPT-4 available via the API. There has been a lot of talk about GPT-4 being "nerfed" since the May update, and I wanted to check whether there was an obvious difference. I ran the same prompt in both the web-based ChatGPT with GPT-4 selected, and a self-hosted chat UI that uses the API. The API call used a temperature value of 0.7.

Here is the prompt I used

I use Nginx as a reverse proxy on my Ubuntu server. There is a web app running on localhost:3456, and I want to expose it to the internet using the hostname chat.botond.online. I also want to protect it using HTTP Basic authentication. I have the Apache tools for managing htpasswd files installed.

Could you please write an Nginx config file for this site, and also explain how I can create a htpasswd file and use it in this nginx config to protect it?

Here is ChatGPT's response

Sure, I can certainly help with that. We will perform these tasks in two steps:

- Create an htpasswd file

- Write an Nginx config file

Let's start with the first one.

Step 1: Create an htpasswd file

You can create a .htpasswd file by using the htpasswd command that comes with the Apache httpd server. In your case, you can create a user (let's say user1) like this:

sudo htpasswd -c /etc/nginx/.htpasswd user1

This will prompt you to enter and confirm a password for the user user1. The -c option tells htpasswd to create a new file.

Please note: This will overwrite the file if it already exists. If you want to add additional users to an existing .htpasswd file, do not use the -c flag.

Step 2: Write an Nginx config file

Now, let's create an Nginx configuration. You can add a new server block in your Nginx config, or create a new config file under /etc/nginx/sites-available/ and symlink it to /etc/nginx/sites-enabled/. I'll create a new server block as an example:

    server {
        listen 80;
        server_name something.somewhere.com;

        location / {
            auth_basic "Restricted Content"; # This is the message that will be shown in the login prompt
            auth_basic_user_file /etc/nginx/.htpasswd; # The file you created earlier

            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

            proxy_pass http://localhost:3456;
        }
    }

This will set up basic HTTP authentication on your site and also reverse proxy requests to your web app running on localhost:3456.

Note: If you're planning to expose this to the internet, I would strongly recommend setting up SSL as well. You can use a free service like Let's Encrypt to get an SSL certificate. If you don't secure your site with HTTPS, the HTTP Basic authentication credentials will be sent in plain text, which is a significant security risk.

Here is the response to the same prompt using the API

Sure, let's start by creating the htpasswd file for HTTP basic authentication. You can create a .htpasswd file using htpasswd command that comes with the apache2-utils package. If it's not installed, you can install it via apt-get install apache2-utils.

To create a .htpasswd file, run the following command:

htpasswd -c /etc/nginx/.htpasswd username

Replace username with the username you want. After running this command, you will be prompted to enter and confirm a password for this user. The -c flag is used to create a new file. Do not use this flag if you already have a .htpasswd file and just want to add a new user.

Now, let's create a Nginx configuration file. Using your favorite text editor (nano, vim, etc.), open up a new configuration file in /etc/nginx/sites-available/, for example:

sudo nano /etc/nginx/sites-available/something.somewhere.com

Then, paste the following configuration:

    server {
        listen 80;
        server_name something.somewhere.com;

        location / {
            auth_basic "Restricted Content";
            auth_basic_user_file /etc/nginx/.htpasswd;

            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;

            proxy_pass http://localhost:3456;
        }
    }

In this configuration:

- listen 80; means Nginx is listening on port 80 for incoming connections.

- server_name something.somewhere.com; specifies the hostname.

- auth_basic "Restricted Content"; enables HTTP basic authentication with the message "Restricted Content".

- auth_basic_user_file /etc/nginx/.htpasswd; specifies the location of the .htpasswd file.

- The proxy_set_header directives are used to pass on some client information to the proxied server.

- proxy_pass http://localhost:3456; is used to forward requests to your web app running at localhost:3456.

Save and close the file.

To enable this site, create a symbolic link of it in the sites-enabled directory:

sudo ln -s /etc/nginx/sites-available/something.somewhere.com /etc/nginx/sites-enabled/

Finally, test the configuration and restart Nginx:

sudo nginx -t

sudo systemctl restart nginx

Now your web app should be accessible from the internet at http://something.somewhere.com, and it should ask for a username and password as you specified in the .htpasswd file.

There is a marked difference between the two responses, but as far as I can see, both are correct and high-quality. ChatGPT is less verbose, while the API generates a lot more detail. I prefer the API's answer slightly.
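
ChatGPT's warning about plain-text credentials is easy to check. With HTTP Basic authentication the browser sends the username and password base64-encoded in an Authorization header, and base64 is an encoding, not encryption. The following is a minimal sketch using only the Python standard library. The helper names and the sample credentials are made up for illustration.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build the Authorization header value a client sends for Basic auth."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def decode_basic_auth(header: str) -> tuple[str, str]:
    """Recover the credentials from a captured header value; no secret is needed."""
    scheme, _, token = header.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic auth header")
    username, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return username, password


# Hypothetical credentials, for illustration only.
header = basic_auth_header("user1", "s3cret")
print(header)
print(decode_basic_auth(header))
```

Anyone who can observe an unencrypted request can run the equivalent of decode_basic_auth on it, which is why serving the site over HTTPS matters here.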

I will probably try the same experiment with much more complex problems (if anyone has a suggestion for a good test case, please tell me in a comment), but on this simple problem, they both performed very well.
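
For anyone who wants to repeat the comparison, here is a rough sketch of what the API side of such a request could look like, using only the Python standard library. It assumes the Chat Completions endpoint and the model name gpt-4. The self-hosted chat UI used above may build its requests differently, and the prompt and API key below are placeholders.

```python
import json
import urllib.request

# Assumed endpoint: OpenAI's Chat Completions API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt, api_key, model="gpt-4", temperature=0.7):
    """Build (but do not send) a chat completion request for a single user prompt."""
    payload = {
        "model": model,
        "temperature": temperature,  # same value as in the experiment above
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder prompt and key, for illustration only.
req = build_request(
    "Could you please write an Nginx config file for this site?",
    api_key="YOUR_API_KEY",
)
print(json.dumps(json.loads(req.data), indent=2))
# Passing req to urllib.request.urlopen() would send it; that step is left out here.
```

With a temperature of 0.7 the output is sampled, so two runs of the same prompt will usually differ. A fair comparison would look at several responses per side, not just one.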

I’ve been waiting for Code Interpreter for a long time. I’m very excited to finally be able to try it. Some interesting examples here:

TL;DR: (human-generated 👱‍♂️)

- GPT-4 API access for all current paying customers

- New instruction model: gpt-3.5-turbo-instruct

- Deprecation of the Edits API

- Deprecation of all older embedding models in favor of text-embedding-ada-002, but don’t worry:

We recognize this is a significant change for developers using those older models. Winding down these models is not a decision we are making lightly. We will cover the financial cost of users re-embedding content with these new models. We will be in touch with impacted users over the coming days.

Ok, this is an uncharacteristically bad summary, AutoTLDR. Bad bot!

@AutoTLDR

BTW Satan is a very cool guy, follow him on Twitter: @s8n

And people are seriously considering federating with Threads if it implements ActivityPub. Things have been so crazy recently that I think if Satan existed and started a Lemmy instance, there would probably still be people arguing in good faith for federating with him.