Perhaps,

Check out https://github.com/gitmylo/audio-webui

What are your favorite 13B models for Ooba?

It's been a while since I checked the latest, I'm sure there's been tons released but I have no idea what's good now.

I respect an article quoting Mike Tyson in the title! Let's have a read.

Damn, I have this issue:

File "/home/st/.pyenv/versions/3.10.12/lib/python3.10/json/encoder.py", line 179, in default

raise TypeError(f'Object of type {o.__class__.__name__} '

TypeError: Object of type PosixPath is not JSON serializable

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip. ERROR: Failed building wheel for pyworld
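For anyone wondering what that `TypeError` means: `json` raises it whenever it is handed a `pathlib` path object. Below is a minimal sketch of the cause and one common workaround. It assumes nothing about the pyworld build script itself, and the `config` dict is made up for illustration.

```python
import json
from pathlib import PurePosixPath

# Hypothetical data: any dict holding a pathlib object triggers the error.
config = {"build_dir": PurePosixPath("/tmp/build")}

try:
    json.dumps(config)
    message = ""
except TypeError as err:
    # Same kind of message as in the traceback above.
    message = str(err)

# Workaround: tell json to fall back to str() for objects it does not know.
encoded = json.dumps(config, default=str)
```

This only illustrates the error. Whether the failing build can be patched this way is not something the traceback tells us.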

I'm going to try this out, but if it's using that much VRAM I might be out of luck

Asking the model for a recent event:

Thinks for a moment Well, one thing that comes to mind is the recent advancements in space exploration! Did you hear about NASA's Perseverance rover landing on Mars last month? Excitedly It's so cool to see how much we're learning about the red planet and the potential for life beyond Earth! And with private companies like SpaceX and Blue Origin leading the charge in space travel, it's an exciting time to be alive for anyone who loves science and tech. Her eyes shine with enthusiasm What's your take on all this, Matt? Are you interested in space exploration at all?

And the date of that was:

https://edition.cnn.com/2021/02/18/world/mars-perseverance-rover-landing-scn-trnd/index.html

So it was trained on data at least as recent as March 2021.
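The date reasoning can be sketched as below. The landing date comes from the CNN link; the only assumption is that the model's "last month" means the calendar month right after the landing.

```python
from datetime import date

landing = date(2021, 2, 18)  # Perseverance landing date, per the CNN link above

# "Last month" from the model's point of view implies the month after the landing.
if landing.month == 12:
    implied = date(landing.year + 1, 1, 1)
else:
    implied = date(landing.year, landing.month + 1, 1)

implied_year_month = (implied.year, implied.month)
```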

New Llama 2 models available now!

https://huggingface.co/TheBloke

Currently testing

https://huggingface.co/TheBloke/Llama-2-13B-chat-GPTQ

NEW LLAMA 2 MODELS FROM THE BLOKE!!

Giving this one a go!

https://huggingface.co/TheBloke/Llama-2-13B-chat-GPTQ

Introducing Llama 2 - Meta's Next-Generation Commercially Viable Open-Source AI & LLM

cross-posted from: https://lemmy.world/post/1750098

> ## Introducing Llama 2 - Meta's Next Generation Free Open-Source Artificially Intelligent Large Language Model
>
> It's incredible it's already here! This is great news for everyone in free open-source artificial intelligence.
>
> Llama 2 unleashes Meta's (previously) closed model (Llama) to become free open-source AI, accelerating access and development for large language models (LLMs).
>
> This marks a significant step in machine learning and deep learning technologies. With this move, a widely supported LLM can become a viable choice for businesses, developers, and entrepreneurs to innovate our future using a model that the community has been eagerly awaiting since its initial leak earlier this year.
>
> - Meta Announcement
> - Meta Overview
> - Github
> - Paper
>
> Here are some highlights from the official Meta AI announcement:
>
> ## Llama 2
>
> > In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. Our fine-tuned LLMs, called Llama 2-Chat, are optimized for dialogue use cases.
> >
> > Our models outperform open-source chat models on most benchmarks we tested, and based on our human evaluations for helpfulness and safety, may be a suitable substitute for closed-source models. We provide a detailed description of our approach to fine-tuning and safety improvements of Llama 2-Chat in order to enable the community to build on our work and contribute to the responsible development of LLMs.
>
> > Llama 2 pretrained models are trained on 2 trillion tokens, and have double the context length than Llama 1. Its fine-tuned models have been trained on over 1 million human annotations.
>
> ## Inside the Model
>
> - Technical details
>
> ### With each model download you'll receive:
>
> - Model code
> - Model Weights
> - README (User Guide)
> - Responsible Use Guide
> - License
> - Acceptable Use Policy
> - Model Card
>
> ## Benchmarks
>
> > Llama 2 outperforms other open source language models on many external benchmarks, including reasoning, coding, proficiency, and knowledge tests. It was pretrained on publicly available online data sources. The fine-tuned model, Llama-2-chat, leverages publicly available instruction datasets and over 1 million human annotations.
>
> ## RLHF & Training
>
> > Llama-2-chat uses reinforcement learning from human feedback to ensure safety and helpfulness. Training Llama-2-chat: Llama 2 is pretrained using publicly available online data. An initial version of Llama-2-chat is then created through the use of supervised fine-tuning. Next, Llama-2-chat is iteratively refined using Reinforcement Learning from Human Feedback (RLHF), which includes rejection sampling and proximal policy optimization (PPO).
>
> ## The License
>
> > Our model and weights are licensed for both researchers and commercial entities, upholding the principles of openness. Our mission is to empower individuals, and industry through this opportunity, while fostering an environment of discovery and ethical AI advancements.
>
> > Partnerships
>
> > We have a broad range of supporters around the world who believe in our open approach to today’s AI - companies that have given early feedback and are excited to build with Llama 2, cloud providers that will include the model as part of their offering to customers, researchers committed to doing research with the model, and people across tech, academia, and policy who see the benefits of Llama and an open platform as we do.
>
> ## The/CUT
>
> With the release of Llama 2, Meta has opened up new possibilities for the development and application of large language models. This free open-source AI not only accelerates access but also allows for greater innovation in the field.
>
> Take Three:
>
> - Video Game Analogy: Just like getting a powerful, rare (or previously banned) item drop in a game, Llama 2's release gives developers a powerful tool they can use and customize for their unique quests in the world of AI.
> - Cooking Analogy: Imagine if a world-class chef decided to share their secret recipe with everyone. That's Llama 2, a secret recipe now open for all to use, adapt, and improve upon in the kitchen of AI development.
> - Construction Analogy: Llama 2 is like a top-grade construction tool now available to all builders. It opens up new possibilities for constructing advanced AI structures that were previously hard to achieve.
>
> ## Links
>
> Here are the key resources discussed in this post:
>
> - Meta Announcement
> - Meta Overview
> - Github
> - Paper
> - Technical details
>
> Want to get started with free open-source artificial intelligence, but don't know where to begin?
>
> Try starting here:
>
> - FOSAI Welcome Message
> - FOSAI Crash Course
> - FOSAI Nexus Resource Hub
>
> If you found anything else about this post interesting - consider subscribing to [email protected] where I do my best to keep you in the know about the most important updates in free open-source artificial intelligence.
>
> This particular announcement is exciting to me because it may popularize open-source principles and practices for other enterprises and corporations to follow.
>
> We should see some interesting models emerge out of Llama 2. I for one am looking forward to seeing where this will take us next. Get ready for another wave of innovation! This one is going to be big.

I had issues getting it to run, so I'll come back to it. I have other ways to generate Bark audio. I found Bark to be by far the most natural sounding; it just sounds like it was recorded on a PC mic from 1999. Silero and ElevenLabs sound monotone to me.

I haven't tried Tortoise yet, I'll have to try that!

Adobe Podcast: Best site for cleaning poor quality audio!

Next generation audio from Adobe is here. Record, transcribe, edit, share. Crisp and clear, every time.

Parameters explained! Now you too can know what the hell you're doing!

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

Not sure why it took me so long to find this.

I ended up downloading all of the 13B SuperHOT models, each one seems great, but I am still trying to work out how to set the parameters correctly

Text To Speech toolkit: TTS Generation Web UI (Bark, MusicGen, Tortoise)

TTS Generation Web UI (Bark, MusicGen, Tortoise). Contribute to rsxdalv/tts-generation-webui development by creating an account on GitHub.

I just discovered this repo, it looks really useful for creating AI voices

https://github.com/rsxdalv/tts-generation-webui

Baize exLlama SuperHOT is the best model I've used yet


This has been the best so far. There's some weird behaviour sometimes, but maybe that's my parameters.

For some characters, this has been the best at keeping them in character and progressing the story!

Model download links

https://huggingface.co/TheBloke

contains the latest exLlama SuperHOT 8K context models

Can't you just search for communities across all instances?

Actually considering that we will end up pushing the tutorial off the page with comments, just create a new post in this community for questions!

If anyone suggests a place to upload the voices I created, I'll do it and reply to this comment with the download link.

Now you can move your voice over to the right bark folder in ooba to play with. You can test the voice in the notebook if you just keep moving through the code blocks, I'm sure you'll be able to figure that part out by yourself.

In order to be able to select the voice, I actually had to overwrite one of the existing English voices, because my voice didn't appear in the list.

Overwrite (make backup of original if you want) en_speaker_0.npz in one of these folders:

oobabooga_linux_2/installer_files/env/lib/python3.1/site-packages/bark/assets/prompts/v2

oobabooga_linux_2/installer_files/env/lib/python3.1/site-packages/bark/assets/prompts/

And select the voice (or the v2/voice) from the list in bark in ooba.
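The overwrite-with-backup step can be sketched like this. `install_voice` is a hypothetical helper, not part of Bark or ooba; it just copies files, and you would point `prompts_dir` at one of the two folders listed above.

```python
import shutil
from pathlib import Path


def install_voice(custom_voice: Path, prompts_dir: Path,
                  slot: str = "en_speaker_0.npz") -> Path:
    """Back up the stock voice in `prompts_dir`, then overwrite it with `custom_voice`."""
    target = prompts_dir / slot
    backup = target.with_name(target.name + ".bak")
    # Keep only the first backup, so re-running never clobbers the original voice.
    if target.exists() and not backup.exists():
        shutil.copy2(target, backup)
    shutil.copy2(custom_voice, target)
    return target
```

To undo it, copy `en_speaker_0.npz.bak` back over `en_speaker_0.npz`.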

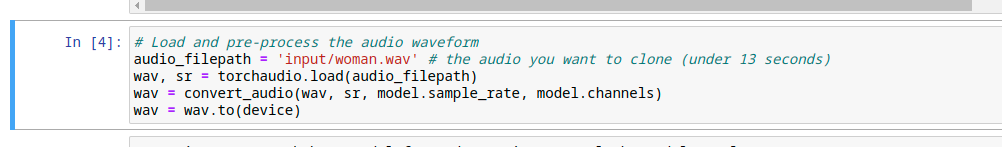

You now need to get a <10 second wav file as an example to train from. Apparently as little as 4 seconds works too. I won't cover that in this tutorial.
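If you want to check the clip length before training, here is a minimal sketch using only the standard library. It assumes an uncompressed PCM wav file, and the 4 and 10 second bounds are simply the figures mentioned above, not limits I have verified in the Bark code.

```python
import wave


def wav_duration_seconds(path: str) -> float:
    """Return the length of an uncompressed WAV file in seconds."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def is_usable_sample(path: str, min_s: float = 4.0, max_s: float = 10.0) -> bool:
    # Bounds taken from the text above: roughly 4 seconds up to just under 10.
    return min_s <= wav_duration_seconds(path) < max_s
```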

For mine, I cut some audio from a clip of a woman speaking with very little background noise. You can use https://www.lalal.ai/ to extract voice from background noise, but I didn't need to do that in this case. I did when using a clip of Megabyte from Reboot talking, which worked.. mostly well.

I created an input folder to put my training wav file in:

bark-with-voice-clone/input

Now we can go through this next section of the tutorial:

Run Jupyter Notebook while in the bark folder:

jupyter notebook

This will open a new browser tab with the Jupyter interface. Click on clone_voice.ipynb

This is very similar to Google Colab, where you run blocks of code. Click on the first block of code and click Run. If the code block has a "[*]" next to it, then it is still processing; just give it a minute to finish.

This will take a while and download a bunch of stuff.

If it manages to finish without errors, run blocks 2 and 3. In block 4, change the line to: filepath = "input/audio.wav"

Make sure you update this block with a valid filepath and audio file name (to prevent a permissions-related error, remove the leading "/").

Outputs will be found in: bark\assets\prompts

Now for a tricky part:

When I tried to run through voice cloning, I had this error:

--> 153 def auto_train(data_path, save_path='model.pth', load_model: str | None = None, save_epochs=1):

154 data_x, data_y = [], []

156 if load_model and os.path.isfile(load_model):

TypeError: unsupported operand type(s) for |: 'type' and 'NoneType'

From file customtokenizer.py in directory bark-with-voice-clone/hubert/

To solve this, I just plugged the error into ChatGPT and made some slight modifications to the code.

At the top I added the import for Union underneath the other imports:

from typing import Union

And at line 154 (153 before adding the import above), I modified it as instructed:

def auto_train(data_path, save_path='model.pth', load_model: Union[str, None] = None, save_epochs=1):

Compare to the original line:

def auto_train(data_path, save_path='model.pth', load_model: str | None = None, save_epochs=1):

And that solved the issue, we should be ready to go!
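Why this works: the `str | None` annotation syntax is only evaluated successfully on Python 3.10 and later, and this tutorial runs in a Python 3.9 venv, so the function definition itself fails. `Union[str, None]` (or the equivalent `Optional[str]`) works on older versions. A minimal sketch, where `auto_train_stub` is a stand-in I made up with the same signature shape and no training logic:

```python
from typing import Optional, Union


def auto_train_stub(data_path: str,
                    save_path: str = "model.pth",
                    load_model: Union[str, None] = None,
                    save_epochs: int = 1) -> Optional[str]:
    """Stand-in with the patched signature; it trains nothing and returns load_model."""
    return load_model


# Optional[str] is just shorthand for Union[str, None].
same_type = Optional[str] == Union[str, None]
```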

Now all the components should be installed. Note that I did not need to install numpy or torch as described in the original post

You can test that it works by running

jupyter notebook

And you should get an interface like this pop up in your default browser:

Disclaimer: I'm using Linux, but the same general steps should apply to Windows as well.

First, create a venv so we keep everything isolated:

python3.9 -m venv bark-with-voice-clone/

Yes, this requires **Python 3.9**; it will not work with Python 3.11.

cd bark-with-voice-clone

source bin/activate

pip install .

When this completes:

pip install jupyterlab

pip install notebook

I needed to install more than what was listed in the original post, as each time I ran the notebook it would report another missing package. This is because the original user already had these components installed, but since we are using a virtual environment, these extras are required:

Pip install the below:

soundfile

ipywidgets

fairseq

audiolm_pytorch

tensorboardX
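Instead of finding the missing pieces one notebook run at a time, you can check for all of the extras listed above from inside the venv. This is a rough sketch; `missing_modules` is a hypothetical helper, and it assumes each import name matches its pip package name.

```python
import importlib.util


def missing_modules(names):
    """Return the names from `names` that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Import names assumed to match the pip package names listed above.
EXTRAS = ["soundfile", "ipywidgets", "fairseq", "audiolm_pytorch", "tensorboardX"]

if __name__ == "__main__":
    print("Still missing:", missing_modules(EXTRAS))
```

Anything it prints still needs a `pip install`.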

Tutorial on voice cloning with Bark TTS with all the instructions and examples

I've linked the original Reddit post, but it didn't work for me. I had to take a bunch of extra steps, so I've written a tutorial. The original instructions, which I'll refer to, are copied here so you don't have to visit Reddit. My revised tutorial with all instructions follows in the replies. Please post questions as a new post in this community; I've locked this thread so that the tutorial remains easily accessible.

Zyin 24 points 2 months ago*

Instructions on how to get this setup if you've never used Jupyter before, like me. I'm not an expert at this, so don't respond asking for technical help.

If you've never done stuff that needs Python before, you'll need to install Pip and Git. Google for the download links. If you have Automatic1111 installed already you already have Pip and Git.

Install the repo. It will be installed in the folder where you open the cmd window:

git clone https://github.com/serp-ai/bark-with-voice-clone

Open a new cmd window in the newly downloaded repo's folder (or cd into it) and run its installation stuff:

pip install .

Install Jupyter notebook. It's basically Google Colab, but run locally:

pip install jupyterlab (this one may not be needed, I did it anyway)

pip install notebook

If you are on windows, you'll need these to do audio code stuff with Python:

pip install soundfile

pip install ipywidgets

You need to have Torch 2 installed. You can do that with this command (will take a while to download/install):

pip3 install numpy --pre torch torchvision torchaudio --force-reinstall --index-url https://download.pytorch.org/whl/nightly/cu118

To check your current Torch version, open a new cmd window and type these in one at a time:

python

import torch

print(torch.__version__) #(mine says 2.1.0.dev20230421+cu118)
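The same check as a small script, in case you would rather not type it into the interpreter. `torch_version` is a helper name I made up; it returns None instead of crashing when Torch is not installed.

```python
import importlib.util


def torch_version():
    """Return the installed torch version string, or None if torch is not installed."""
    if importlib.util.find_spec("torch") is None:
        return None
    import torch  # imported lazily so the check itself never crashes
    return torch.__version__


if __name__ == "__main__":
    print(torch_version())
```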

Now everything is installed. Create a folder called "output" in the bark folder, which will be needed later to prevent a permissions error.

Run Jupyter Notebook while in the bark folder:

jupyter notebook

This will open a new browser tab with the Jupyter interface. Navigate to /notebooks/generate.ipynb

This is very similar to Google Colab, where you run blocks of code. Click on the first block of code and click Run. If the code block has a "[*]" next to it, then it is still processing; just give it a minute to finish.

This will take a while and download a bunch of stuff.

If it manages to finish without errors, run blocks 2 and 3. In block 3, change the line to: filepath = "output/audio.wav" to prevent a permissions related error (remove the leading "/").
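The reason the leading "/" matters: it makes the path absolute, so the notebook tries to write at the filesystem root instead of inside the bark folder. A minimal sketch of the difference, plus a hypothetical `ensure_output_dir` helper that creates the "output" folder mentioned earlier:

```python
from pathlib import Path, PurePosixPath

# A leading "/" makes the path absolute (rooted at the filesystem root),
# where a normal user usually cannot write.
absolute = PurePosixPath("/output/audio.wav").is_absolute()
relative = PurePosixPath("output/audio.wav").is_absolute()


def ensure_output_dir(base: Path) -> Path:
    """Create `base/output` if needed and return the path for audio.wav inside it."""
    out_dir = base / "output"
    out_dir.mkdir(parents=True, exist_ok=True)
    return out_dir / "audio.wav"
```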

You can get different voices by changing the voice_name variable in block 1. Voices are installed at: bark\assets\prompts

For reference on my 3060 12GB, it took 90 seconds to generate 13 seconds of audio. The voice models that come out of the box create a robotic sounding voice, not even close to the quality of ElevenLabs. The voice that I created using /notebooks/clone_voice.ipynb with my own voice turned out terrible and was completely unusable, maybe I did something wrong with that, not sure.
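To put that timing in perspective, here is the arithmetic as a tiny sketch. The one-minute estimate assumes generation time scales linearly with clip length, which is my assumption and not something measured here.

```python
generation_seconds = 90.0  # wall-clock time reported above
audio_seconds = 13.0       # length of the generated clip

# Real-time factor: seconds of compute per second of audio (lower is better).
real_time_factor = generation_seconds / audio_seconds

# Rough estimate for a one-minute clip, assuming linear scaling.
estimated_minute = real_time_factor * 60
```

That works out to roughly 7 seconds of compute for every second of audio on that card.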

If you want to test the voice clone using your own voice, and you record a voice sample using Windows Voice Recorder, you can convert the .m4a file to .wav with ffmpeg (separate download):

ffmpeg -i "C:\Users\USER\Documents\Sound recordings\Recording.m4a" "C:\path\to\bark-with-voice-clone\
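The same conversion can be driven from Python. This is a sketch under assumptions: ffmpeg must be on your PATH, both helper names are made up, and the paths are whatever you pass in (the output path in the command above is cut off, so I'm not guessing it).

```python
import subprocess


def build_ffmpeg_command(src: str, dst: str) -> list:
    """Build the argument list for `ffmpeg -i src dst`.

    ffmpeg picks the output format from the destination file extension.
    """
    return ["ffmpeg", "-i", src, dst]


def convert_to_wav(src: str, dst: str) -> None:
    # Requires ffmpeg on PATH; raises CalledProcessError if the conversion fails.
    subprocess.run(build_ffmpeg_command(src, dst), check=True)
```

Passing the arguments as a list avoids quoting problems with spaces in folder names like "Sound recordings".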

___

There's a community here for LLMs: [email protected]

Yeah, I often have those dreams where I'm out of place and being judged by everyone who has agreed to be weird

Some of these were absolute gold.. I'm happy to see these coming back!

It's kind of awesome we are having these problems really.

The shadow

I enter my son's room because I heard talking, meaning he isn't going to sleep.

When I go in, I find his cousin there, who won't stop laughing. I grab his arm and ask him why he is there, and I realise there is no way he could be there. I angrily yank his arm and demand he tells me how he is here, why he is here, but he just keeps laughing.

I hear ragged breathing from my son, like he is struggling to breathe or being choked, and I turn back to see his head and shoulders covered in a dark shadow. I let go of his cousin and rush towards my son, and the shadow leaps onto my face making the world completely dark.

I wake up breathing quickly.

Reddit:pixelnull: My tips for people new to Pygmalion to get better responses and hopefully this clears up a few things about Pygmalion generally.

really good tips here!

Sharing time for sufferers of INTJ with ADHD

Would be nice to hear from people who suffer from this condition who also have ADHD..

How do you relax?

How do you switch your mind off at night?

How do you motivate yourself to do those tasks that you really don't want to do?

How do you not focus 110%/25 hours a day on the latest obsession?

Do you also have a caffeine and/or nicotine / alcohol addiction?

Aitrepreneur: NEW ExLLAMA Breakthrough! 8K TOKENS! LESS VRAM & SPEED BOOST!


Tested it myself, huge improvement!

Intense fantasy-style dream I had last night (not nsfw)

Part 1: I was with friends discussing a book that we all enjoyed. Then I was in a house, and I realised that the book was connected to the house. A deep tunnel was being dug, to find something. We were going to find the tunnel. I walked into the other room to light the fire. I picked up the piece of coal to put in the fireplace. It was shaped like the head of an animal. It appeared to be possessed by some malevolent spirit. People were worried about the spirit, but I was antagonistic, and went hunting to find it. Then I can remember entering a large concrete building. A big hairy creature was standing at an opening, trying to fix some clockwork mechanism with chains. He complained that I had made things difficult for him, the chains weren't aligning. He then finally got a large weight attached to a chain to an opening, and let it go, and it started dragging the chains as it slid out.

Part 2: I went outside, and saw the chains had started to pull a surface of lead out. I was on top of a long concrete slope, but now the surface was covered in lead. It was like fast flowing liquid metal, except it was solid. I could walk on it, but every second it was changing, appearing to be a river of metal. I enjoyed running down the slope, and up the sides of the walls as the metal flowed over them. I reached the bottom, and was with 2 people: my brother and our close friend. We followed a river until we came to a dam made of terraced stone blocks. It was quite well made, and obviously for people to enjoy walking beside. But we had noticed that the river had been flowing towards the dam, and from the pool at the bottom, small trickles were running up the hill to the top of the dam. My friend commented that 'if you found water that behaved that way, wouldn't you build something to try to stop it?' And it seemed this new fancy stone dam was built on top of an ancient structure.

Part 3: We continued walking and at the top of the dam we were in a forest. I split off from the group and noticed in the trees, there were hanging bunches of raspberries. I ate one, and it was delicious. I yelled to the others to try them too, and then noticed that all sorts of different delicious looking berries were hanging down, some even looked and tasted like gummy worms. And then I found the book on the ground, that I mentioned earlier. Things seemed very bad. I ran back over to my friend, who was then acting crazy, and was trying to eat a plastic bag. He tried to brush me off when I tried to get him to stop. I grabbed him by his head and I could see his eyes didn't look right. I told him "I love you bro, I wouldn't be telling you to do this if it wasn't deadly serious. You have to snap out of it. trust me!" And he seemed to understand, and took the plastic out of his mouth.

Part 4: Then we walked out of the forest and found ourselves in a room, like a small cafe with windows where we could see the street. Me and my brother were there, and our friend was seated at a table, with a collection of odd things arranged in a specific way. Things like, stones, leaves, half a walnut shell. And there was a woman, she had black hair. She said "and now the last part. A close friend makes 2 brothers realise they have betrayed each other." and the friend moved two objects on the table into different positions. "it's done." he said. And then I realised, that this has all happened before. We had been manipulated again into completing their ritual.

Final part:

I started to cry and panic a bit, and I yelled at the two of them: "We have been trying to escape but they keep bringing us back here!" The woman told my brother and my friend, "It's not a good idea to look at the sky." But I knew what was there, and I told the other 2 they should look and see for themselves. Outside the world had changed, there was different technology, strange vehicles, and people dressed very differently. Covering most of the sky above us, there was a huge eye, surrounded by dark brown fur. It was watching the world, while everyone tried to avoid its gaze. And as I looked out the window, moving just enough to glimpse the edge of the eye, I knew I didn't want it to see me.