[text-to-image] You can now upload your own dataset to the Fusion-gen + other features

Feature 1: Add your own dataset

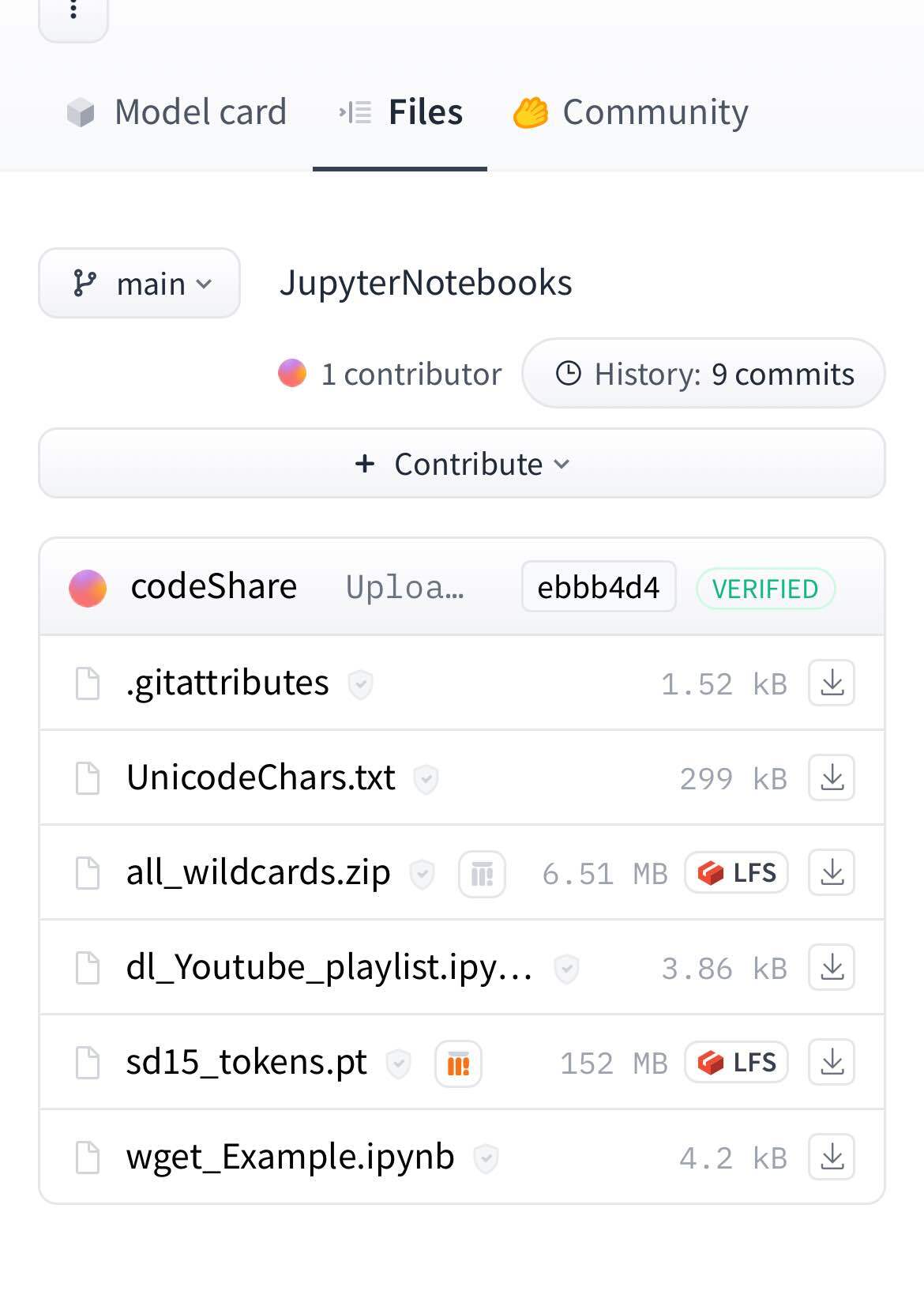

You can now upload your own private dataset to the fusion gen using this template.

Ideally, you can try using AI chatbots to expand the code within a category that interests you.

Feel free to share your dataset on the fusion Discord page: https://discord.gg/dbkbhf8H2E

//----//

Feature 2: Token search

You can search tokens in the fusion generator.

Search tokens example

Words that sound similar in English do not necessarily share similarity in Stable Diffusion.

Take for example "happyhalloween", "happyeaster" and "happy".

Each corresponds to a completely different 1x768 vector.

They sound like they would share some kind of similarity because they all have "happy" in their name, but they don't.

The words are just "labels" that are attributed to values in a big dictionary.

The values (1x768 vectors) are what Stable Diffusion uses, and these can be very different even though their "labels" sound similar.

It's a bit difficult to demonstrate similarity between token vectors, but I plan to. See below for further info.

So it's worth searching the list, whatever your purpose :)

//---//

Other stuff (feel free to skip this)

I'm working on a feature that shows % similarity between tokens, in order to showcase this later on.

TL;DR: we use vector math to calculate cosine similarity between tokens.

It's the cosine of the angle between the vectors, cos(theta), which is 0 when they are perpendicular (no similarity) and 1 when they are parallel (same vector direction). In general it can also go down to -1, for vectors pointing in opposite directions.
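Here is a minimal sketch of that calculation in plain Python. The 3-number vectors are toy stand-ins for the real 1x768 token vectors, and the function name is just for illustration, not something from the fusion gen code.

```python
import math

def cosine_similarity(a, b):
    """Return cos(theta) between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy 3-dimensional vectors standing in for real 1x768 token vectors.
same_direction = cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])  # close to 1
perpendicular = cosine_similarity([1.0, 0.0, 0.0], [0.0, 1.0, 0.0])   # 0
opposite = cosine_similarity([1.0, 0.0, 0.0], [-1.0, 0.0, 0.0])       # -1

print(same_direction, perpendicular, opposite)
```

Multiplying the result by 100 would give the kind of "% similarity" figure mentioned above.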

Progress so far is that I've managed to extract the token vectors in the SD model.

Out of the 7.5 GB original SD model, the 47K token vectors only make up 152 MB,

which may actually be manageable to download when starting the fusion gen.
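As a rough sanity check on that size, here is a back-of-the-envelope sketch. It assumes each value is stored as a 32-bit float (4 bytes), which is an assumption on my part, and it uses the rounded 47K count, so it only lands in the same ballpark as the figure above (about 144 MB).

```python
def tensor_size_mb(rows, cols, bytes_per_value=4):
    """Approximate size in megabytes (1 MB = 10**6 bytes).

    bytes_per_value=4 assumes float32 storage (an assumption, not confirmed).
    """
    return rows * cols * bytes_per_value / 1_000_000

# ~47K tokens, 768 values per token vector
approx_mb = tensor_size_mb(47_000, 768)
print(f"{approx_mb:.1f} MB")
```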

The sd15_tokens.pt file holds a ~47000 x 768 tensor (a big list),

so once it is loaded as sd15_tokens, I can get the token vector for ID 1005 by writing sd15_tokens[1005] in Python code.

Why go through this trouble?

The thing is, with this list you can look up a token ID and then get the 10 other tokens with the greatest similarity.
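A rough sketch of that lookup, using a tiny toy list in place of the real sd15_tokens tensor. The names most_similar and toy_tokens are made up for this example, and this is not the fusion gen's actual implementation. On the full list, a tensor library such as PyTorch would do the same job much faster.

```python
import math

def cosine_similarity(a, b):
    """Return cos(theta) between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def most_similar(tokens, token_id, k=10):
    """Return the k (similarity, id) pairs closest to tokens[token_id]."""
    query = tokens[token_id]
    scored = [
        (cosine_similarity(query, vector), other_id)
        for other_id, vector in enumerate(tokens)
        if other_id != token_id  # skip the query token itself
    ]
    scored.sort(reverse=True)  # highest similarity first
    return scored[:k]

# Toy stand-in for the token list: 5 "tokens" with 3 values each.
toy_tokens = [
    [1.0, 0.0, 0.0],  # ID 0
    [0.9, 0.1, 0.0],  # ID 1, nearly the same direction as ID 0
    [0.0, 1.0, 0.0],  # ID 2, perpendicular to ID 0
    [0.0, 0.0, 1.0],  # ID 3, perpendicular to ID 0
    [0.7, 0.7, 0.0],  # ID 4, 45 degrees away from ID 0
]

top = most_similar(toy_tokens, token_id=0, k=2)
print([other_id for _, other_id in top])
```

For token ID 0, the two closest IDs in this toy list are 1 and 4.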

Similarity search like this can be really useful on its own. It will also play a role when auto-scaling weights for prompts, which I plan to add.

That's because you can get some amazing results by scaling sections of the prompt to weights in the 0.1-0.3 range, but it's hard to do manually.
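To give an idea of what scaling a section looks like, here is a small sketch that wraps prompt sections in the (text:weight) syntax used by the AUTOMATIC1111 web UI. Whether your tool uses that exact syntax is an assumption, and the weighted helper and the example prompt are made up for illustration.

```python
def weighted(section, weight):
    """Wrap a prompt section in (text:weight) syntax.

    Assumes A1111-style prompt weighting; other tools may use a different syntax.
    """
    return f"({section}:{weight:g})"

prompt = ", ".join([
    "photo of a forest",
    weighted("happyhalloween", 0.2),
    weighted("fog, moonlight", 0.3),
])
print(prompt)
```

Doing this by hand for every section gets tedious quickly, which is why automating the weights would help.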