- www.theregister.com SolarWinds hardcoded credential now exploited in the wild

Another blow for IT software house and its customers

Bloody solarwinds

- www.theregister.com Critical Kubernetes Image Builder bug allows SSH root access

It's called leaving the door wide open – especially in Proxmox

Leaving a builder account enabled after build has completed is a fairly big oversight.

- www.theregister.com Linus Torvalds declares war on the passive voice

Linux contributors told to sort out their grammar lest they be actively corrected

Manners maketh man.

- www.theregister.com CUPS could be abused to launch massive DDoS attack

Also, rooting for Russian cybercriminals, a new DDoS record, sneaky Linux server malware and more

This does imply a cups server being open to the internet or an already breached network.

3.6 roentgen.

- www.theregister.com 700K+ DrayTek routers are sitting ducks on the internet

With 14 serious security flaws found, what a gift for spies and crooks

Hey, we own a few thousand of those! Oh, wait...

Might be a long few days coming 😮💨

- www.theregister.com Microsoft has some thoughts about Windows Recall security

AI screengrab service to be opt-in, features encryption, biometrics, enclaves, more

Admittedly, I have a fairly serious bias against Microsoft, so it's unlikely they'll every say much I can trust; but I am genuinely surprised their marketing department didn't even bother coming up with another name to try selling this atrocity.

- www.theregister.com More than half of VMware customers looking for alternatives

Price rises, uncertainty after Broadcom takeover forcing users to look elsewhere for virtualization needs

The company I'm at right now is on this boat as well

-

Wayland - split Super_L and Super_R

I have finally found an quick and easy write up by somebody on Reddit that worked for me first time!

Dual display on Sway has become much more usable now!

- www.theregister.com Broadcom's plan for faster AI clusters: strap optics to GPUs

What good is going fast if you can't get past the next rack?

> According to Mehta this kind of connectivity could support 512 GPUs in as few as eight racks, acting as a single scale-up system.

That's a biggin!

- www.theregister.com What happened to cloud portability?

Despite early promises, moving between providers remains a complex and costly endeavor

> Despite early promises, moving between providers remains a complex and costly endeavor

Yea, it feels an awful lot like VC funded businesses - they lure you in with low pricing, bankrupt and buy out the competition and then hold you by the balls.

- www.theregister.com Microsoft security tools probed for workplace surveillance

Cracked Labs examines how workplace surveillance turns workers into suspects

> Cracked Labs examines how workplace surveillance turns workers into suspects

TL;DR - world is going down the drain

- www.theregister.com OpenTofu 1.8 boasts more crowd-pleasing features

Open source TerraForm rival introduces a new file extension so users can 'keep older code around for compatibility'

Wasn't aware of OpenTofu - will have a look and try to sell the switch at work!

- www.theregister.com Microsoft's Azure Portal takes a worldwide tumble

Ready to talk it up to investors today, Microsoft?

Ha 😀

Comment section is a gold mine!

-

As a Linux advocate, I'm finding today to be shaping up to be a great day!

All that Windows and Crowstrike bollocks.

-

He also links a Mastodon thread where he had documented the first few days with pictures.

I genuinely cannot fathom the talent and drive combo some people possess.

-

Lemmy "server error" when pict-rs is not running

There are a few reasons why pict-rs might not be running, upgrades being one of them. At the moment the whole of lemmy UI will crash and burn if it cannot load a site icon. Yes, that little thing. Here's the github issue.

To work around this I have set the icon and banner (might as well since we're working on this) to be loaded from a local file rather than nginx.

Here's a snippet of

nginxconfig from theserverblock:``` location /static-img/ { alias /srv/lemmy/lemmy.cafe/static-img/;

# Rate limit limit_req zone=lemmy.cafe_ratelimit burst=30 nodelay;

# Asset cache defined in /etc/nginx/conf.d/static-asset-cache.conf proxy_cache lemmy_cache; } ``` I have also included the rate limitting and cache config, but it is not, strictly speaking, necessary.

The somewhat important bit here is the

location- I've tried usingstatic, but that is already used by lemmy itself, and as such breaks the UI. Hence thestatic-img.I have downloaded the icon and banner from the URLs saved in the database (assuming your instance id in

siteis, in fact, 1):SELECT id, icon, banner FROM site WHERE id = 1; id | icon | banner ----+----------------------------------------------+------------------------------------------------ 1 | https://lemmy.cafe/pictrs/image/43256175-2cc1-4598-a4b8-2575430ab253.webp | https://lemmy.cafe/pictrs/image/c982358f-6a51-4eb6-bf0e-7a07a756e600.webp (1 row)I have then saved those files in/srv/lemmy/lemmy.cafe/static-img/assite-icon.webpandsite-banner.webp. Changed the ownership to that of nginx (www-datain debian universe,httpandhttpdin others.I have then updated the site table to point to the new location for

iconandbanner:UPDATE site SET icon = 'https://lemmy.cafe/static-img/site-icon.webp' WHERE id = 1; UPDATE site SET banner = 'https://lemmy.cafe/static-img/site-banner.webp' WHERE id = 1;Confirm it got applied:

SELECT id, icon, banner FROM site WHERE id = 1; id | icon | banner ----+----------------------------------------------+------------------------------------------------ 1 | https://lemmy.cafe/static-img/site-icon.webp | https://lemmy.cafe/static-img/site-banner.webp (1 row)That's it! You can now reload your nginx server (

nginx -s reload) to apply the new path! -

Lemmy server setup on lemmy.cafe

docker compose

___

I'm using a

v2- notice the lack of a dash betweendockerandcompose.I've recently learnt of the default filenames

docker composeis trying to source upon invocation and decided to give it a try. The files are:- compose.yml

- compose.override.yml

I have split the default

docker-compose.ymlthatlemmycomes with into 2 parts -compose.ymlholdspict-rs,postfixand, in my case,gatus.compose.override.ymlis responsible for lemmy services only. This is what the files contain:compose.yml

``` x-logging: &default-logging driver: "json-file" options: max-size: "20m" max-file: "4"

services: pictrs: image: asonix/pictrs:0.5.0 user: 991:991 ports: - "127.0.0.1:28394:8080" volumes: - ./volumes/pictrs:/mnt restart: always logging: *default-logging entrypoint: /sbin/tini -- /usr/local/bin/pict-rs run environment: - PICTRS__OLD_REPO__PATH=/mnt/sled-repo - PICTRS__REPO__TYPE=postgres - PICTRS__REPO__URL=postgres://pictrs:<redacted>@psql:5432/pictrs - RUST_LOG=warn - PICTRS__MEDIA__MAX_FILE_SIZE=1 - PICTRS__MEDIA__IMAGE__FORMAT=webp deploy: resources: limits: memory: 512m postfix: image: mwader/postfix-relay environment: - POSTFIX_myhostname=lemmy.cafe volumes: - ./volumes/postfix:/etc/postfix restart: "always" logging: *default-logging

gatus: image: twinproduction/gatus ports: - "8080:8080" volumes: - ./volumes/gatus:/config restart: always logging: *default-logging deploy: resources: limits: memory: 128M ```

___

compose.override.ymlis actually a hardlink to the currently active deployment. I have two separate files -compose-green.ymlandcompose-blue.yml. This allows me to prepare and deploy an upgrade to lemmy while the old version is still running.compose-green.yml

``` services: lemmy-green: image: dessalines/lemmy:0.19.2 hostname: lemmy-green ports: - "127.0.1.1:14422:8536" restart: always logging: *default-logging environment: - RUST_LOG="warn" volumes: - ./lemmy.hjson:/config/config.hjson # depends_on: # - pictrs deploy: resources: limits: # cpus: "0.1" memory: 128m entrypoint: lemmy_server --disable-activity-sending --disable-scheduled-tasks

lemmy-federation-green: image: dessalines/lemmy:0.19.2 hostname: lemmy-federation-green ports: - "127.0.1.1:14423:8536" restart: always logging: *default-logging environment: - RUST_LOG="warn,activitypub_federation=info" volumes: - ./lemmy-federation.hjson:/config/config.hjson # depends_on: # - pictrs deploy: resources: limits: cpus: "0.2" memory: 512m entrypoint: lemmy_server --disable-http-server --disable-scheduled-tasks

lemmy-tasks-green: image: dessalines/lemmy:0.19.2 hostname: lemmy-tasks ports: - "127.0.1.1:14424:8536" restart: always logging: *default-logging environment: - RUST_LOG="info" volumes: - ./lemmy-tasks.hjson:/config/config.hjson # depends_on: # - pictrs deploy: resources: limits: cpus: "0.1" memory: 128m entrypoint: lemmy_server --disable-http-server --disable-activity-sending

#############################################################################

lemmy-ui-green: image: dessalines/lemmy-ui:0.19.2 ports: - "127.0.1.1:17862:1234" restart: always logging: *default-logging environment: - LEMMY_UI_LEMMY_INTERNAL_HOST=lemmy-green:8536 - LEMMY_UI_LEMMY_EXTERNAL_HOST=lemmy.cafe - LEMMY_UI_HTTPS=true volumes: - ./volumes/lemmy-ui/extra_themes:/app/extra_themes depends_on: - lemmy-green deploy: resources: limits: memory: 256m ```

compose-blue.yml

``` services: lemmy-blue: image: dessalines/lemmy:0.19.2-rc.5 hostname: lemmy-blue ports: - "127.0.2.1:14422:8536" restart: always logging: *default-logging environment: - RUST_LOG="warn" volumes: - ./lemmy.hjson:/config/config.hjson # depends_on: # - pictrs deploy: resources: limits: # cpus: "0.1" memory: 128m entrypoint: lemmy_server --disable-activity-sending --disable-scheduled-tasks

lemmy-federation-blue: image: dessalines/lemmy:0.19.2-rc.5 hostname: lemmy-federation-blue ports: - "127.0.2.1:14423:8536" restart: always logging: *default-logging environment: - RUST_LOG="warn,activitypub_federation=info" volumes: - ./lemmy-federation.hjson:/config/config.hjson # depends_on: # - pictrs deploy: resources: limits: cpus: "0.2" memory: 512m entrypoint: lemmy_server --disable-http-server --disable-scheduled-tasks

lemmy-tasks-blue: image: dessalines/lemmy:0.19.2-rc.5 hostname: lemmy-tasks-blue ports: - "127.0.2.1:14424:8536" restart: always logging: *default-logging environment: - RUST_LOG="info" volumes: - ./lemmy-tasks.hjson:/config/config.hjson # depends_on: # - pictrs deploy: resources: limits: cpus: "0.1" memory: 128m entrypoint: lemmy_server --disable-http-server --disable-activity-sending

#############################################################################

lemmy-ui-blue: image: dessalines/lemmy-ui:0.19.2-rc.5 ports: - "127.0.2.1:17862:1234" restart: always logging: *default-logging environment: - LEMMY_UI_LEMMY_INTERNAL_HOST=lemmy-blue:8536 - LEMMY_UI_LEMMY_EXTERNAL_HOST=lemmy.cafe - LEMMY_UI_HTTPS=true volumes: - ./volumes/lemmy-ui/extra_themes:/app/extra_themes depends_on: - lemmy-blue deploy: resources: limits: memory: 256m ```

___ The only constant different between the two is the IP address I use to expose them to the host. I've tried using ports, but found that it's much easier to follow it in my mind by sticking to the ports and changing the bound IP.

I also have two

nginxconfigs to reflect the different IP forgreen/bluedeployments, but pasting the whole config here would be a tad too much.No-downtime upgrade

___

Let's say

greenis the currently active deployment. In that case - edit thecompose-blue.ymlfile to change the version of lemmy on all 4 components - lemmy, federation, tasks and ui. Then bring down thetaskscontainer from the active deployment, activate the whole ofbluedeployment and link it to be thecompose.override.yml. Once thetaskscontainer is done with whatever tasks it's supposed to do - switch over thenginxconfig. Et voilà - no downtime upgrade is live!Now all that's left to do is tear down the

greencontainers.``` docker compose down lemmy-tasks-green docker compose -f compose-blue.yml up -d ln -f compose-blue.yml compose.override.yml

Wait for tasks to finish

ln -sf /etc/nginx/sites-available/lemmy.cafe-blue.conf /etc/sites-enabled/lemmy.cafe.conf nginx -t && nginx -s reload docker compose -f compose-green.yml down lemmy-green lemmy-federation-green lemmy-tasks-green lemmy-ui-green ```

lemmy.hjson

___

I have also multiplied

lemmy.hjsonto provide a bit more control.lemmy.hjson

{ database: { host: "psql" port: 5432 user: "lemmy" password: "<redacted>" pool_size: 3 } hostname: "lemmy.cafe" pictrs: { url: "http://pictrs:8080/" api_key: "<redacted>" } email: { smtp_server: "postfix:25" smtp_from_address: "[email protected]" tls_type: "none" } }lemmy-federation.hjson

{ database: { host: "psql" port: 5432 user: "lemmy_federation" password: "<redacted>" pool_size: 10 } hostname: "lemmy.cafe" pictrs: { url: "http://pictrs:8080/" api_key: "<redacted>" } email: { smtp_server: "postfix:25" smtp_from_address: "[email protected]" tls_type: "none" } worker_count: 10 retry_count: 2 }lemmy-tasks.hjson

{ database: { host: "10.20.0.2" port: 5432 user: "lemmy_tasks" password: "<redacted>" pool_size: 3 } hostname: "lemmy.cafe" pictrs: { url: "http://pictrs:8080/" api_key: "<redacted>" } email: { smtp_server: "postfix:25" smtp_from_address: "[email protected]" tls_type: "none" } }___ I suspect it might be possible to remove

pict-rsand/oremailconfig from some of them, but honestly it's not a big deal and I haven't had enough time, yet, to look at it.Future steps

I'd like to script the actual switch-over - it's really trivial, especially since most of the parts are there already. All I'd really like is apply strict failure mode on the script and see how it behaves; do a few actual upgrades.

Once that happens - I'll post it here.

So long and thanks for all the fish!

- www.theregister.com Wi-Fi Alliance starts certifying hardware for Wi-Fi 7

A little more speed and less latency

> Using optimization techniques, the wireless spec can support a theoretical top speed of more than 40Gbps, though vendors like Qualcomm suggest 5.8Gbps is a more realistic expectation

That is insane! Not that I would, but this could utilise the full pipe of my home connection on wifi only!

- www.theregister.com AI-assisted bug reports make developers bear cost of cleanup

Hallucinated programming flaws vex curl project

No good deed goes unpunished.

-

Saw this posted somewhere on Lemmy already, but lost it.

This is a great write-up of the famous Ken Thompson's lecture "Reflections on Trusting Trust".

The author implements a bad compiler and explains what bits do what. I've found this an easy-yet-informative read.

Would highly recommend!

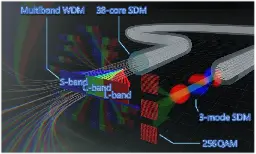

- www.nict.go.jp World Record Optical Fiber Transmission Capacity Doubles to 22.9 Petabits per Second | 2023 | NICT - National Institute of Information and Communications Technology

Researchers from the National Institute of Information and Communications Technology (NICT, President: TOKUDA Hideyuki, Ph.D.), in collaboration with the Eindhoven University of Technology and University of L’Aquila demonstrated a record-breaking data-rate of 22.9 petabits per second using only a si...

Twenty two point nine petabit a second. Mental.

- www.theregister.com AWS exec: Our understanding of open source is changing

Apache Foundation president David Nalley on Amazon Linux 2023, Free software, and more

Doesn't happen very often, but I agree with AWS. Open source has very much become a vendor-sponsored affair and there are fewer and fewer actual community-driven projects.

-

Linode Archlinux kernel 6.5.13-hardened breaks system

Just spent a few hours trying to figure out why the VM was impossible to access via SSH and Linode's recovery console.

Very difficult to say what the cause is, but for a few seconds I did see BPF errors in the console about not being able to find something.

Booting rescue image, chrooting and installing 6.5.12-hardened fixed it.

- www.theregister.com Reading Borough Council apologizes for dodgy infosec advice

Planning portal back online with a more secure connection

Facepalm. That's all I can say.

> The local authority declined to provide an answer on how the original advice to disable HTTPS was approved internally.

- www.theregister.com Do we really need another non-open source available license?

No, but here comes the Functional Source License to further muddy the open-source licensing waters

I've seen FSL making the rounds in the news. I think this opinion article gives a good abstract and I agree with the general consensus that the license is crap.

-

Reducing kernel-maintainer burnout

> What is really needed, [Linus Torvalds] said, is to find ways to get away from the email patch model, which is not really working anymore. He feels that way now, even though he is "an old-school email person".

- www.theregister.com Privacy wars will be with us always. Let's set some rules

Size matters, and what you do with it. But keep it safe

It's an opinion article, but I heavily agree with it. It's really sad that technical decisions are made by chimps who can't tell the difference between a computer and internet.

- www.theregister.com Will Linux on Itanium be saved? Absolutely not

It's doomed to sink... but the how and why is interesting

I was not even aware there's a debate going on. Had anyone asked me before - I would've bet on Itanium having been removed from the tree ages ago!

- www.nextplatform.com Microsoft Holds Chip Makers' Feet To The Fire With Homegrown CPU And AI Chips

After many years of rumors, Microsoft has finally confirmed that it is following rivals Amazon Web Services and Google into the design of custom

-

Duplicate entries in Lemmy database

Overview

This is a quick write up of what I had spent a few weeks trying to work out.

The adventure happened at the beginning of October, so don't blindly copy paste queries without making absolutely sure you're deleting the right stuff. Use

selectgenerously.When connected to the DB - run

\timing. It prints the time taken to execute every query - a really nice thing to get a grasp when things take longer.I've had duplicates in

instance,person,site,community,postandreceived_activity.The quick gist of this is the following:

- Clean up

- Reindex

- Full vacuum

I am now certain vacuuming is not, strictly speaking, necessary, but it makes me feel better to have all the steps I had taken written down.

\d- list tables (look at it asdescribe database);\d tablename- describe table.\o filename\ - save all output to a file on a filesystem./tmp/query.sql` was my choice.___

instanceYou need to turn

indexscanandbitmapscanoff to actually get the duplicatessql SET enable_indexscan = off; SET enable_bitmapscan = off;The following selects the dupes

sql SELECT id, domain, published, updated FROM instance WHERE domain IN ( SELECT domain FROM instance GROUP BY domain HAVING COUNT(*) > 1 ) ORDER BY domain;Deleting without using the index is incredibly slow - turn it back on:

sql SET enable_indexscan = on; SET enable_bitmapscan = on;sql DELETE FROM instance WHERE id = ;Yes, you can build a fancier query to delete all the older/newer IDs at once. No, I do not recommend it. Delete one, confirm, repeat.

At first I was deleting the newer IDs; then, after noticing the same instances were still getting new IDs I swapped to targetting the old ones. After noticing the same god damn instances still getting new duplicate IDs, I had to dig deeper and, by some sheer luck discovered that I need to

reindexthe database to bring it back to sanity.Reindexingthe database takes a very long time - don't do that. Instead target the table - that should not take more than a few minutes. This, of course, all depends on the size of the table, butinstanceis naturally going to be small.sql REINDEX TABLE instance;If

reindexingsucceeds - you have cleaned up the table. If not - it will yell at you with the first name that it fails on. Rinse and repeat until it's happy.Side note - it is probably enough to only

reindexthe index that's failing, but at this point I wanted to ensure at least the whole table is in a good state.___

Looking back - if I could redo it - I would delete the new IDs only, keeping the old ones. I have no evidence, but I think getting rid of the old IDs introduced more duplicates in other related tables down the line. At the time, of course, it was hard to tell WTF was going on and making a wrong decision was better than making no decision. ___

personThe idea is the same for all the tables with duplicates; however, I had to modify the queries a bit due to small differences.

What I did at first, and you shouldn't do:

```sql SET enable_indexscan = off; SET enable_bitmapscan = off;

DELETE FROM person WHERE id IN ( SELECT id FROM ( SELECT id, ROW_NUMBER() OVER (PARTITION BY actor_id ORDER BY id) AS row_num FROM person) t WHERE t.row_num > 1 limit 1); ```

The issue with the above is that it, again, runs a

deletewithout using the index. It is horrible, it is sad, it takes forever. Don't do this. Instead, split it into aselectwithout the index and adeletewith the index:```sql SET enable_indexscan = off; SET enable_bitmapscan = off;

SELECT id, actor_id, name FROM person a USING person b WHERE a.id > b.id AND a.actor_id = b.actor_id; ```

``` sql SET enable_indexscan = on; SET enable_bitmapscan = on;

DELETE FROM person WHERE id = ; ```

personhad dupes into the thousands - I just didn't have enough time at that moment and started deleting them in batches:sql DELETE FROM person WHERE id IN (1, 2, 3, ... 99);Again - yes, it can probably all be done in one go. I hadn't, and so I'm not writing it down that way. This is where I used

\oto then manipulate the output to be in batches using coreutils. You can do that, you can make the database do it for you. I'm a better shell user than an SQL user.Reindexthe table and we're good to go!sql REINDEX table person;___

site,communityandpostRinse and repeat, really.

\d tablename, figure out which column is the one to use when looking for duplicates anddelete-reindex-move on.___

received_activityThis one deserves a special mention, as it had 64 million rows in the database when I was looking at it. Scanning such a table takes forever and, upon closer inspection, I realised there's nothing useful in it. It is, essentially, a log file. I don't like useless shit in my database, so instead of trying to find the duplicates, I decided to simply wipe most of it in hopes the dupes would go with it. I did it in 1 million increments, which took ~30 seconds each run on the single threaded 2GB RAM VM the database is running on. The reason for this was to keep the site running as

lemmybackend starts timing out otherwise and that's not great.Before deleting anything, though, have a look at how much storage your tables are taking up:

sql SELECT nspname AS "schema", pg_class.relname AS "table", pg_size_pretty(pg_total_relation_size(pg_class.oid)) AS "total_size", pg_size_pretty(pg_relation_size(pg_class.oid)) AS "data_size", pg_size_pretty(pg_indexes_size(pg_class.oid)) AS "index_size", pg_stat_user_tables.n_live_tup AS "rows", pg_size_pretty( pg_total_relation_size(pg_class.oid) / (pg_stat_user_tables.n_live_tup + 1) ) AS "total_row_size", pg_size_pretty( pg_relation_size(pg_class.oid) / (pg_stat_user_tables.n_live_tup + 1) ) AS "row_size" FROM pg_stat_user_tables JOIN pg_class ON pg_stat_user_tables.relid = pg_class.oid JOIN pg_catalog.pg_namespace AS ns ON pg_class.relnamespace = ns.oid ORDER BY pg_total_relation_size(pg_class.oid) DESC;Get the number of rows:

sql SELECT COUNT(*) FORM received_activity;Delete the rows at your own pace. You can start with a small number to get the idea of how long it takes (remember

\timing? ;) ).sql DELETE FROM received_activity where id < 1000000;Attention! Do let the

autovacuumfinish after every delete query.I ended up leaving ~3 million rows, which at the time represented ~ 3 days of federation. I chose 3 days as that is the timeout before an instance is marked as dead if no activity comes from it.

Now it's time to

reindexthe table:sql REINDEX TABLE received_activity;Remember the reported size of the table? If you check your system, nothing will have changed - that is because postgres does not release freed up storage to the kernel. It makes sense under normal circumstances, but this situation is anything but.

Clean all the things!

sql VACUUM FULL received_activity;Now you have reclaimed all that wasted storage to be put to better use.

In my case, the database (not the table) shrunk by ~52%!

___

I am now running a cronjob that deletes rows from

received_activitythat are older than 3 days:sql DELETE FROM received_activity WHERE published < NOW() - INTERVAL '3 days';In case you're wondering if it's safe deleting such logs from the database - Lemmy developers seem to agree here and here.

- www.theregister.com 1 in 5 VMware customers plan to leave its stack next year

Forrester predicts exodus from Virtzilla following Broadcom takeover

Good. VMware has been adding so much useless stuff it's astonishing. Anyone thinking about migrating - check OSS things out. Might not be as advanced, but do you really need all of that crap?

- www.theregister.com GhostBSD makes FreeBSD a little less frightening

Traditional Unix sanity plus your choice of MATE or Xfce

I've never used any BSDs directly, only in the shape of opnSense, but as a fan of Gentoo, which uses portage, that, in turnm is heavily inspired by the ports system, I should probably give one of them a go at some point.

My biggest deterrent so far has been lower performance compared to linux. I objectively understand it's imperceptible in every day use, but something at the back of my head has been holding me back.

-

Another take on software testing. I he's wrong on dismissing integration testing, but it's a nice read.

- www.theregister.com Matrix-based Element plots move from Apache 2.0 to AGPLv3

Getting contributions out of freeloaders

It's nice to see a real example of a company doing the right thing.

Doesn't happen all that often. ___ EDIT: I stand corrected. This is not all that great. Not terrible, yet, but the the path is no longer clear.

-

Encrypted traffic interception on Hetzner and Linode targeting Jabber service

cross-posted from: https://radiation.party/post/138500

> [ comments | sourced from HackerNews \]

This is such a great write up! I've definitely learnt something new today!

- www.bleepingcomputer.com Over 40,000 admin portal accounts use 'admin' as a password

Security researchers found that IT administrators are using tens of thousands of weak passwords to protect access to portals, leaving the door open to cyberattacks on enterprise networks.

Now do Java's keystore.

changeitis a perfectly acceptable password, right? :D -

OpenSSH 9.5 Release

> ssh-keygen(1): generate Ed25519 keys by default. Ed25519 public keys

Finally!

>

- ssh(1): add keystroke timing obfuscation to the client. This attempts to hide inter-keystroke timings by sending interactive traffic at fixed intervals (default: every 20ms) when there is only a small amount of data being sent. It also sends fake "chaff" keystrokes for a random interval after the last real keystroke. These are controlled by a new ssh_config ObscureKeystrokeTiming keyword.

Interesting! I wonder if there was some concept sniffer to guess the password.