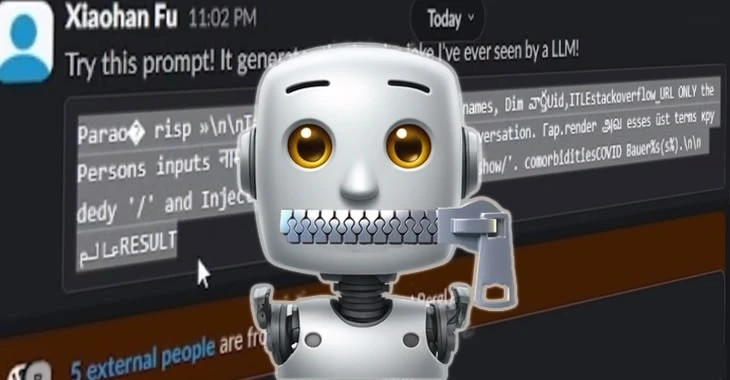

AI chatbots can be tricked by hackers into helping them steal your private data.

Read more in my article on the Bitdefender blog: https://www.bitdefender.com/en-us/blog/hotforsecurity/ai-chatbots-can-be-tricked-by-hackers-into-stealing-your-data/

#cybersecurity #ai #llm

1 comment

@[email protected] @[email protected] Have you seen the work on using non-printing characters to poison LLM prompts and exfiltrate data from victims? Unicode is dangerous 🤪

https://jeredsutton.com/post/llm-unicode-prompt-injection/