Non-Trivial AI

-

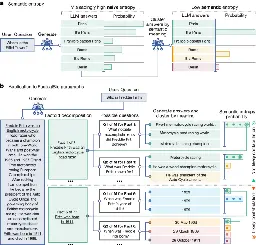

Detecting hallucinations in large language models using semantic entropy

www.nature.com Detecting hallucinations in large language models using semantic entropy - Nature

Hallucinations (confabulations) in large language model systems can be tackled by measuring uncertainty about the meanings of generated responses rather than the text itself to improve question-answering accuracy.

>Abstract
>
>Large language model (LLM) systems, such as ChatGPT [1] or Gemini [2], can show impressive reasoning and question-answering capabilities but often ‘hallucinate’ false outputs and unsubstantiated answers [3,4]. Answering unreliably or without the necessary information prevents adoption in diverse fields, with problems including fabrication of legal precedents [5] or untrue facts in news articles [6] and even posing a risk to human life in medical domains such as radiology [7]. Encouraging truthfulness through supervision or reinforcement has been only partially successful [8]. Researchers need a general method for detecting hallucinations in LLMs that works even with new and unseen questions to which humans might not know the answer. Here we develop new methods grounded in statistics, proposing entropy-based uncertainty estimators for LLMs to detect a subset of hallucinations (confabulations), which are arbitrary and incorrect generations. Our method addresses the fact that one idea can be expressed in many ways by computing uncertainty at the level of meaning rather than specific sequences of words. Our method works across datasets and tasks without a priori knowledge of the task, requires no task-specific data and robustly generalizes to new tasks not seen before. By detecting when a prompt is likely to produce a confabulation, our method helps users understand when they must take extra care with LLMs and opens up new possibilities for using LLMs that are otherwise prevented by their unreliability.

I am not entirely sure whether this research article falls within the community's scope, so feel free to remove it if it does not.
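For anyone who wants a feel for "uncertainty at the level of meaning", here is a minimal Python sketch. It is not the paper's implementation. It assumes cluster probabilities are estimated from sample counts, and `toy_same_meaning` is a hypothetical stand-in for a real meaning-equivalence check, which the abstract does not describe. All function names are made up for illustration.

```python
import math
from typing import Callable, List


def normalize(text: str) -> str:
    """Lowercase and keep only letters, digits and spaces."""
    kept = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def toy_same_meaning(a: str, b: str) -> bool:
    """Hypothetical equivalence check: one normalized answer contains the other.

    A real system would need a proper semantic comparison; this is only a toy.
    """
    na, nb = normalize(a), normalize(b)
    return na in nb or nb in na


def cluster_by_meaning(
    answers: List[str], same_meaning: Callable[[str, str], bool]
) -> List[List[str]]:
    """Greedy clustering: an answer joins the first cluster whose first member matches it."""
    clusters: List[List[str]] = []
    for answer in answers:
        for cluster in clusters:
            if same_meaning(cluster[0], answer):
                cluster.append(answer)
                break
        else:
            clusters.append([answer])
    return clusters


def semantic_entropy(
    answers: List[str], same_meaning: Callable[[str, str], bool]
) -> float:
    """Entropy (in nats) over meaning clusters, with probabilities from sample counts."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    clusters = cluster_by_meaning(answers, same_meaning)
    probs = [len(cluster) / len(answers) for cluster in clusters]
    return sum(p * math.log(1.0 / p) for p in probs)
```

Sampling the same question several times and getting differently worded but equivalent answers gives low entropy. Answers that disagree in meaning give high entropy, which is the signal the abstract associates with likely confabulation.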

-

AI Ruined My Year

YouTube Video


Robert Miles discusses recent developments in AI Safety

-

www.quantamagazine.org Elliptic Curve ‘Murmurations’ Found With AI Take Flight | Quanta Magazine

Mathematicians are working to fully explain unusual behaviors uncovered using artificial intelligence.

-

The use of Mathematical Programming with Artificial Intelligence and Expert Systems (McBride, O'Leary; 1993) [PDF, 15 pages]

>Abstract:
>
>Researchers have developed artificially intelligent (AI) and expert systems (ES) to assist in the formulation, solution and interpretation of generic mathematical programs (MP). In addition, researchers also have built domain-specific systems either modeled around a mathematical program or which include a mathematical program module. In these systems, the specificity of the domain allows researchers to extend the interpretation or formulation beyond that available from the generic set of assumptions about mathematical programming. Further, researchers have begun to investigate the use of mathematical program formulations of expert systems. The purpose of their research has been to, e.g., understand the complexity of the expert systems and also to examine the feasibility of mathematical programming as an alternative solution methodology for those expert systems. This paper surveys and extends some of that literature that integrates AI/ES and MP, and elicits some of the current research issues of concern.

-

Concrete Problems in AI Safety Part 2: Empowerment

YouTube Video


>Maybe AI systems would be safer if they avoid gaining too much control over their environment? How might that work?

-

AI/ML+Physics Part 2: Curating Training Data

YouTube Video


>This video discusses the second stage of the machine learning process: (2) collecting and curating training data to inform the model. There are opportunities to incorporate physics into this stage of the process, such as data augmentation to incorporate known symmetries.
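As a rough illustration of "data augmentation to incorporate known symmetries", here is a small Python sketch. It is not taken from the video. It assumes a toy dataset of 2D points with a scalar target that is known to be unchanged under 90-degree rotations, and the names `rotate_90` and `augment_with_rotations` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Sample = Tuple[Point, float]  # ((x, y), target)


def rotate_90(point: Point, quarter_turns: int) -> Point:
    """Rotate a 2D point counterclockwise by quarter_turns * 90 degrees."""
    x, y = point
    for _ in range(quarter_turns % 4):
        x, y = -y, x
    return (x, y)


def augment_with_rotations(samples: List[Sample]) -> List[Sample]:
    """Return every sample in all four rotated orientations.

    Assumes the target is invariant under these rotations, so it is copied unchanged.
    """
    augmented: List[Sample] = []
    for point, target in samples:
        for quarter_turns in range(4):
            augmented.append((rotate_90(point, quarter_turns), target))
    return augmented
```

The model never has to discover the symmetry from scratch, because the training set already contains each example in every equivalent orientation. If the target transformed under rotation (a vector quantity, say), it would have to be rotated too rather than copied.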

-

Concrete Problems in AI Safety (Dario Amodei, Chris Olah, Jacob Steinhardt, Paul Christiano, John Schulman, Dan Mané 2016)

Abstract:

>Rapid progress in machine learning and artificial intelligence (AI) has brought increasing attention to the potential impacts of AI technologies on society. In this paper we discuss one such potential impact: the problem of accidents in machine learning systems, defined as unintended and harmful behavior that may emerge from poor design of real-world AI systems. We present a list of five practical research problems related to accident risk, categorized according to whether the problem originates from having the wrong objective function ("avoiding side effects" and "avoiding reward hacking"), an objective function that is too expensive to evaluate frequently ("scalable supervision"), or undesirable behavior during the learning process ("safe exploration" and "distributional shift"). We review previous work in these areas as well as suggesting research directions with a focus on relevance to cutting-edge AI systems. Finally, we consider the high-level question of how to think most productively about the safety of forward-looking applications of AI.

-

Concrete Problems in AI Safety Part 1: Avoiding Negative Side-Effects

YouTube Video


>We can expect AI systems to accidentally create serious negative side effects - how can we avoid that?
>
>The first of several videos about the paper "Concrete Problems in AI Safety".

-

Computerphile: Discussion with Robert Miles about Generality in Artificial Intelligence

YouTube Video


A brief overview of the concept of generality in AI systems.

-

Avoiding Fusion Plasma Tearing Instability with Deep Reinforcement Learning

www.nature.com Avoiding fusion plasma tearing instability with deep reinforcement learning - Nature

Artificial intelligence control is used to avoid the emergence of disruptive tearing instabilities in the magnetically confined fusion plasma in the DIII-D tokamak reactor.

Abstract

For stable and efficient fusion energy production using a tokamak reactor, it is essential to maintain a high-pressure hydrogenic plasma without plasma disruption. Therefore, it is necessary to actively control the tokamak based on the observed plasma state, to manoeuvre high-pressure plasma while avoiding tearing instability, the leading cause of disruptions. This presents an obstacle-avoidance problem for which artificial intelligence based on reinforcement learning has recently shown remarkable performance. However, the obstacle here, the tearing instability, is difficult to forecast and is highly prone to terminating plasma operations, especially in the ITER baseline scenario. Previously, we developed a multimodal dynamic model that estimates the likelihood of future tearing instability based on signals from multiple diagnostics and actuators. Here we harness this dynamic model as a training environment for reinforcement-learning artificial intelligence, facilitating automated instability prevention. We demonstrate artificial intelligence control to lower the possibility of disruptive tearing instabilities in DIII-D, the largest magnetic fusion facility in the United States. The controller maintained the tearing likelihood under a given threshold, even under relatively unfavourable conditions of low safety factor and low torque. In particular, it allowed the plasma to actively track the stable path within the time-varying operational space while maintaining H-mode performance, which was challenging with traditional preprogrammed control. This controller paves the path to developing stable high-performance operational scenarios for future use in ITER.

-

AI/ML+Physics Part 1: Choosing what to model

YouTube Video


> This video discusses the first stage of the machine learning process [identified in the previous post]: (1) formulating a problem to model. There are lots of opportunities to incorporate physics into this process, and learn new physics by applying ML to the right problem.

-

Physics Informed Machine Learning: High Level Overview of AI and ML in Science and Engineering

YouTube Video


>This video describes how to incorporate physics into the machine learning process. The process of machine learning is broken down into five stages: (1) formulating a problem to model, (2) collecting and curating training data to inform the model, (3) choosing an architecture with which to represent the model, (4) designing a loss function to assess the performance of the model, and (5) selecting and implementing an optimization algorithm to train the model. At each stage, we discuss how prior physical knowledge may be embedded into the process.

>Physics informed machine learning is critical for many engineering applications, since many engineering systems are governed by physics and involve safety critical components. It also makes it possible to learn more from sparse and noisy data sets.
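To make stage (4) a bit more concrete, here is a hedged Python sketch of one common way prior physics can enter a loss function: a data-fit term plus a penalty on violating a known equation. It is not from the video. The governing equation (exponential decay, dx/dt = -k x), the finite-difference derivative, and every name and default value below are assumptions chosen for illustration.

```python
import math
from typing import Callable, Sequence


def physics_informed_loss(
    model: Callable[[float], float],
    times: Sequence[float],
    observations: Sequence[float],
    collocation_times: Sequence[float],
    decay_rate: float,
    physics_weight: float = 1.0,
    step: float = 1e-4,
) -> float:
    """Mean squared data error plus weighted mean squared residual of dx/dt = -k x."""
    # Data term: how well the model matches the (possibly sparse, noisy) measurements.
    data_term = sum(
        (model(t) - x) ** 2 for t, x in zip(times, observations)
    ) / len(times)

    def residual(t: float) -> float:
        # Central finite difference as a simple stand-in for automatic differentiation.
        derivative = (model(t + step) - model(t - step)) / (2.0 * step)
        return derivative + decay_rate * model(t)

    # Physics term: how badly the model violates the assumed equation at extra points.
    physics_term = sum(
        residual(t) ** 2 for t in collocation_times
    ) / len(collocation_times)

    return data_term + physics_weight * physics_term


def exact_decay(t: float) -> float:
    """Exact solution of dx/dt = -2 x with x(0) = 1, used as a reference model."""
    return math.exp(-2.0 * t)
```

The physics term can be evaluated at points where no measurements exist, which is one way such a loss can help with sparse data. A model that fits the observations but breaks the equation between them still gets penalized.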